It is difficult to believe that the first Hadoop cluster was put into production at Yahoo 10 years ago, on January 28th, 2006. Back then, nobody expected that an open source technology like Apache Hadoop would start a revolution in the world of big data. Since 2006, interest in Hadoop has grown rapidly, making it a cornerstone technology for businesses that want to power world-class products and deliver a best-in-class user experience. We might be a bit late, but it is still worth wishing the poster child for big data analytics a belated Happy Birthday! As January 28th, 2016 marks Hadoop’s tenth birthday, Open Source Society Malta takes the opportunity to celebrate the remarkable presence of the tiny toy elephant in the big data community by highlighting the milestones achieved and the momentum Hadoop has gained over the last ten years.

Happy Birthday Hadoop

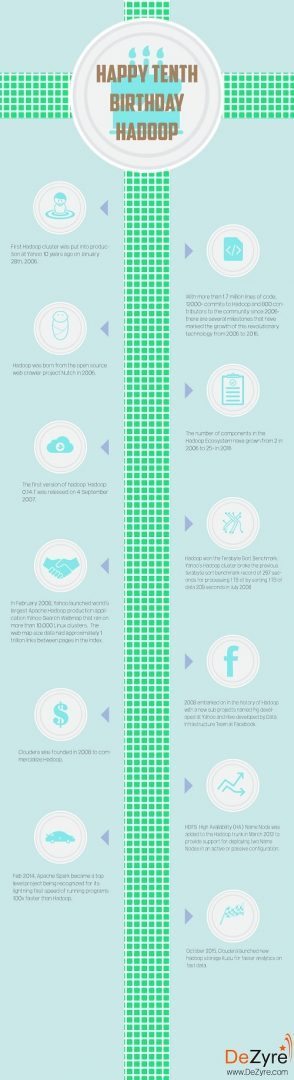

With more than 1.7 million lines of code, 12,000+ commits and 800 contributors to the community since 2006, several milestones have marked the growth of this revolutionary technology from 2006 to 2016. Hadoop has gained stardom in the IT industry because of two important factors: the tidal wave of big data and the Apache open source license, which makes it available to anyone free of cost.

In 10 years Hadoop has grown a large community, and it now powers much of what happens behind the scenes on the web, from selecting the ads that show up in a search engine to the stories you see on your Yahoo homepage and the news feed Facebook assembles based on your browsing history. Hadoop is an innovation in the big data world that has changed how data is processed. It can be used by anyone, from IT giants like IBM and Google to small marketers, for profitable business outcomes.

Hadoop in 2006: The Hardest Step

Hadoop was born from the open source web crawler project Nutch in 2006. Doug Cutting joined Yahoo in 2006 and started a new subproject from Nutch, naming it Hadoop after his young son’s tiny toy elephant. Doug Cutting and Mike Cafarella had only 5 machines to work with, and operating the system required several manual steps. It also offered no reliability: if a machine was lost, its data was lost too. In 2006, Hadoop was not really capable of handling production search workloads, as it ran on only 5 to 20 nodes and without much performance efficiency. It was very difficult to work with, and only people with a fierce passion for coding would try their hand at it.

Hadoop has grown in every possible way from 2006 to 2016, whether measured by the number of users, Hadoop developers, related projects, contributors to core Hadoop, total commits or lines of code written.

Milestones in the History of Hadoop from 2007-2015

The number of components in the Hadoop ecosystem has grown from 2 in 2006 to 25+ in 2016. In 2007, with the work of several Yahoo engineers and the use of several thousand computers, Hadoop became a reliable and comparatively stable system for processing petabytes of data on commodity hardware. Hadoop 0.14.1 was released on 4 September 2007.

Hadoop became a top-level Apache project in 2008 and also won the Terabyte Sort Benchmark. In July 2008, Yahoo’s Hadoop cluster sorted 1 TB of data in 209 seconds, breaking the previous record of 297 seconds. This was a major milestone in the history of Hadoop, as it was the first time that an open source, Java-based project had won that benchmark.
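To put the two benchmark times in perspective, the short sketch below works out the average sort throughput and the relative speedup. It uses only the 209 and 297 second figures quoted above; treating 1 TB as 10^12 bytes is an assumption, and the function name is purely illustrative.

```python
# Assumption: 1 TB is taken as 10**12 bytes (a decimal terabyte).
TERABYTE_BYTES = 10**12


def throughput_gb_per_s(seconds: float, total_bytes: int = TERABYTE_BYTES) -> float:
    """Average sort throughput in decimal gigabytes per second."""
    return total_bytes / seconds / 10**9


previous_record_s = 297  # previous benchmark record, from the text
hadoop_record_s = 209    # Yahoo's Hadoop run, from the text

# How many times faster the Hadoop run was, and the share of time saved.
speedup = previous_record_s / hadoop_record_s
time_saved_pct = (previous_record_s - hadoop_record_s) / previous_record_s * 100

print(f"previous record: {throughput_gb_per_s(previous_record_s):.2f} GB/s")
print(f"Hadoop run:      {throughput_gb_per_s(hadoop_record_s):.2f} GB/s")
print(f"speedup: {speedup:.2f}x, time saved: {time_saved_pct:.1f}%")
```

Under these assumptions the Hadoop run finished roughly 1.4 times faster than the previous record, cutting the sort time by a little under a third.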

In February 2008, Yahoo launched the world’s largest Apache Hadoop production application, the Yahoo Search Webmap, which ran on a Linux cluster with more than 10,000 cores. The web map data contained approximately 1 trillion links between pages in the index. This was another major milestone in the history of Hadoop, as it proved that even at an early stage of development Hadoop was capable of handling web-scale projects at an economical cost. Today, the results returned for billions of search queries executed at Yahoo every month depend on data produced by Hadoop clusters.

2008 also made its mark in the history of Hadoop with two new sub-projects: Hive, developed by the Data Infrastructure Team at Facebook, and Pig, developed at Yahoo. Both aimed to bring the concepts of rows, columns, partitions, tables and something SQL-like to the world of Hadoop while maintaining its flexibility and extensibility. Hive provided a SQL access framework for Hadoop so that people without a programming background could make the best use of it. A few months later, the Apache Pig sub-project was added to the Hadoop ecosystem to ease programming for people who were not familiar with MapReduce concepts and to optimize execution.
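To give a feel for the MapReduce concepts that Hive and Pig were meant to hide, here is a minimal in-memory sketch of the classic word count in plain Python. It is not Hadoop code and uses no Hadoop API. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, and the whole job runs in a single process rather than across a cluster.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group all emitted values by key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce step: collapse the values for one key into a single count."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle and reduce in sequence over the input lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


# Hypothetical sample input, for illustration only.
sample = ["hadoop stores data", "hadoop processes data"]
counts = word_count(sample)
print(counts)
```

Even this toy version needs three separate functions for what is conceptually a single "group by word and count" operation, which is the kind of boilerplate a SQL-like layer lets non-programmers skip.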

With the vision of bringing Hadoop and related sub-projects to traditional enterprises, Cloudera was founded in 2008 to commercialize Hadoop and help organizations develop enterprise-grade big data solutions. Today, Cloudera is the leading innovator and the largest contributor in the Apache open source community. Doug Cutting joined Cloudera as Chief Architect in 2009. To overcome the NameNode single point of failure (SPOF) problem, a new feature named HDFS High Availability (HA) NameNode was added to the Hadoop trunk in March 2012, providing support for deploying two NameNodes in an active/passive configuration. To address the criticisms of Hadoop’s scaling limitations and job handling, YARN was developed in August 2012.

In February 2014, Apache Spark (dubbed the Swiss Army Knife of Big Data) graduated from an Incubator project to a top-level project at the Apache Software Foundation, recognized for its ease of use and for its speed, with programs claimed to run up to 100x faster than on Hadoop MapReduce. To complement the existing storage options, HDFS and HBase, Cloudera launched a new Hadoop storage engine, Kudu, for fast analytics on fast data. Kudu combines capabilities of HDFS and HBase by providing efficient columnar scans along with fast updates and inserts to support common real-time use cases.

Hadoop has been a game changer since 2006, helping developers process big data faster and build better methods for page layout, advertising, spell-check and more. With several sub-projects like Pig, Hive, HBase, Impala, Kudu and more, Hadoop is today, and will continue to be, the go-to technology for easy and affordable analysis of big data.

Ten years have passed, but Yahoo still claims to have the largest Hadoop deployment in the world, with over 35,000 Hadoop servers hosted across 16 clusters, a combined 600 petabytes of storage capacity, and close to 34 million compute jobs executed every month.

Hadoop Predictions for 2016

Gartner’s Merv Adrian said- “Despite investment in training programs, broad availability of Hadoop administration skills (value enablers) and analytics skills (value creators) is 2-3 years out. Don’t underestimate this uphill climb. Next, applications taking advantage of the Hadoop ecosystem need to emerge. To this point, the work done on Hadoop has effectively been artisanal data management—everything is built by hand. That’s fine if you’ve got the skills, but most enterprises don’t build software—they buy it. Hadoop can be a great place for those applications to reside.”

There is still a 2 to 3-year ramp-up required for enterprises to get up to speed with Hadoop skills, due to a lack of expert talent. As interest in and adoption of traditional technologies like Oracle falls, the demand for Hadoop professionals is increasing at an accelerated pace. With companies like Uber and Facebook offering a glimpse of the future by making the best use of Hadoop’s power, the future of Hadoop is bright, with many more milestones to be achieved in the next 10 years. As more companies bake Hadoop analytics into their products, the demand for Hadoop developers is anticipated to increase over time.

Advice for Developers interested in working with Hadoop

As the data century unfolds, Hadoop is a good fit for tasks ranging from machine learning to real-time querying and analysis of large amounts of data. All this is thanks to the big data community, the contributors, the committers and everyone who has helped Hadoop grow at a rapid pace and made it the de facto standard for big data processing. As big data trends continue in 2016, Hadoop will continue to make a remarkable impact in the big data world, with many more advanced tools and enhancements to meet new challenges.

2016 is the best time to get started with learning Hadoop and building applications on it. Hadoop has come a long way in 10 years, with several complementary projects and tools that now form an integral part of the Hadoop ecosystem. With the combination of Hadoop and Spark enabling novel business use cases for the enterprise at a lower cost of storing and processing big data, Hadoop and Spark skills will be in huge demand for the next 5 years.

Excited to see what the next 10 years have in store for Apache Hadoop?